“Thin is the line between a hero and a memory” – Optimus Prime

Still yet to find a quote about transformer AI when looking up transformer quotes. Also, that quote feels like it could actually be about the transformer, which does not have memory circuits (as standard, as described in the original paper).

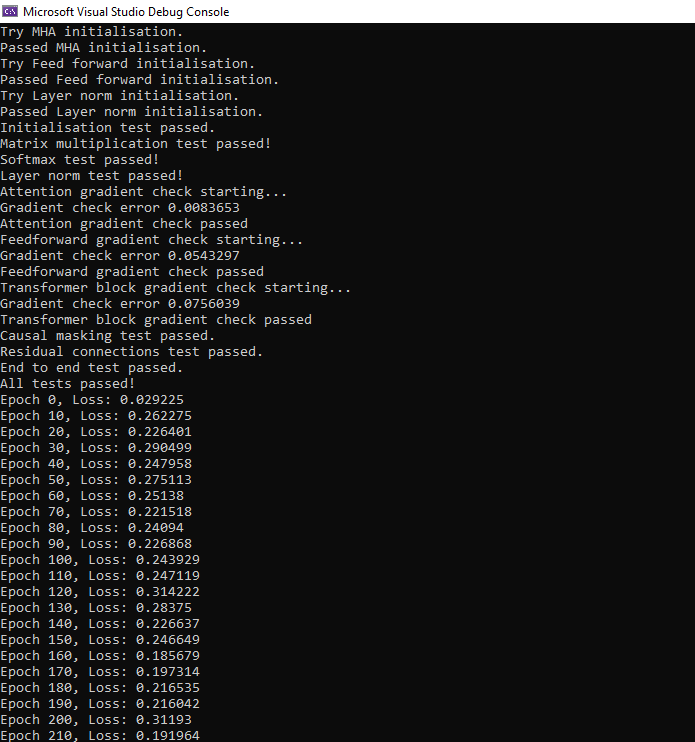

I finished testing

So I am using this as a learning exercise, which means I am making many mistakes and flitting back to vibe coding to understand the concepts I am fuzzy on. As with all things vibe-coded, I am having to spend a lot more time on tests than on writing code.

I ended up building asserts all over the place and a testing class that is set up to run a barrage of tests. It now prints to the screen the results of the tests that check the gradient is within acceptable limits and that the shapes of all matrices are consistent.

Currently, the gradient is randomly generated, so the printed loss is nonsense, and I have to build all the training “bits” to get this far.

The gradient is a bit high. Ideally it should be below 1e-1 (around 1e-2) at the start and around 1e-6 after training. It is an area where I could do with learning a bit more, and in some places I have looked at assessing the gradient layer by layer instead of at an object level, to see where it explodes or vanishes. That is to say, lots of numbers multiplying each other tend to create either very big or very small numbers very quickly, and obviously I want to find a middle ground.
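As a rough illustration of the layer-by-layer idea, here is a minimal sketch that computes an L2 norm per layer and flags anything outside a chosen band. The `LayerGrad` struct, the function names and the thresholds passed in are all assumptions made up for this example, not the project's actual classes.

```cpp
#include <cassert>
#include <cmath>
#include <string>
#include <vector>

// Hypothetical container: one flat gradient buffer per named layer.
struct LayerGrad {
    std::string name;
    std::vector<double> values;
};

// L2 norm of a single gradient buffer.
double gradNorm(const std::vector<double>& g) {
    double sumSq = 0.0;
    for (double v : g) sumSq += v * v;
    return std::sqrt(sumSq);
}

// Returns the names of layers whose gradient norm falls outside [lo, hi].
// A norm below lo hints at vanishing, a norm above hi hints at exploding.
std::vector<std::string> flagLayers(const std::vector<LayerGrad>& grads,
                                    double lo, double hi) {
    assert(lo <= hi);
    std::vector<std::string> flagged;
    for (const auto& layer : grads) {
        const double n = gradNorm(layer.values);
        if (n < lo || n > hi) flagged.push_back(layer.name);
    }
    return flagged;
}
```

Calling `flagLayers(grads, 1e-6, 1e-1)` after each backward pass would give a list of suspect layers to print alongside the existing test output; the band itself would need tuning per model.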

I did implement leaky ReLU (if an output is less than zero, it previously zeroed the output but now multiplies it by 0.001), and that did help, but I thought I would not get too hung up on it as it is a starting point. The model being super small and this being an initial test does tend to drive the gradient up, and I expect it to be much smaller with the larger models that I will look to train. Still, I will need to implement some data gathering processes to watch out for that.
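For reference, a minimal sketch of leaky ReLU with the 0.001 slope mentioned above, plus the matching backward step. The function names and the flat `std::vector<double>` layout are assumptions for illustration rather than the project's real code.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

constexpr double kLeak = 0.001;  // negative-side slope quoted in the post

// Forward: positive values pass through, negative values are scaled down.
double leakyRelu(double x) { return x > 0.0 ? x : kLeak * x; }

// Local derivative: 1 on the positive side, kLeak otherwise (never exactly 0).
double leakyReluGrad(double x) { return x > 0.0 ? 1.0 : kLeak; }

// Backward: scale each upstream gradient by the local derivative,
// evaluated at the pre-activation value seen in the forward pass.
std::vector<double> leakyReluBackward(const std::vector<double>& preAct,
                                      const std::vector<double>& upstream) {
    assert(preAct.size() == upstream.size());
    std::vector<double> grad(preAct.size());
    for (std::size_t i = 0; i < preAct.size(); ++i) {
        grad[i] = upstream[i] * leakyReluGrad(preAct[i]);
    }
    return grad;
}
```

Because the negative side keeps a small non-zero derivative, units that would be "dead" under plain ReLU still pass a little gradient back.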

I am not going to share code for this as it's almost identical

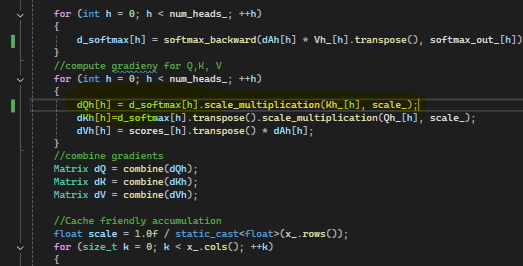

The one exception is that I found a bug (just one) from a week of testing. Previously, I was transposing Kh_ before multiplying by d_softmax, but doing that will mean sometimes the attention head will have the wrong dimensions.
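To show why a bug like this can hide, here is a sketch under some loud assumptions: that `Kh_` is the per-head key matrix of shape (sequence length × head dimension), that `d_softmax` is the gradient with respect to the attention scores (sequence length × sequence length), and that the scores were computed as Q·Kᵀ with the scaling factor left out. The `Matrix` struct and all function names are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Minimal row-major matrix, invented for this example.
struct Matrix {
    std::size_t rows, cols;
    std::vector<double> data;
    Matrix(std::size_t r, std::size_t c) : rows(r), cols(c), data(r * c, 0.0) {}
    double& at(std::size_t i, std::size_t j) { return data[i * cols + j]; }
    double at(std::size_t i, std::size_t j) const { return data[i * cols + j]; }
};

Matrix transpose(const Matrix& m) {
    Matrix t(m.cols, m.rows);
    for (std::size_t i = 0; i < m.rows; ++i)
        for (std::size_t j = 0; j < m.cols; ++j) t.at(j, i) = m.at(i, j);
    return t;
}

// True when the product a * b is defined.
bool canMultiply(const Matrix& a, const Matrix& b) { return a.cols == b.rows; }

Matrix matmul(const Matrix& a, const Matrix& b) {
    assert(canMultiply(a, b));  // shape assert of the kind the post describes
    Matrix out(a.rows, b.cols);
    for (std::size_t i = 0; i < a.rows; ++i)
        for (std::size_t k = 0; k < a.cols; ++k)
            for (std::size_t j = 0; j < b.cols; ++j)
                out.at(i, j) += a.at(i, k) * b.at(k, j);
    return out;
}

// With scores = Q * K^T, the gradient w.r.t. Q is dScores * K (K not transposed).
Matrix gradQuery(const Matrix& dScores, const Matrix& K) {
    return matmul(dScores, K);
}
```

Under these assumptions, multiplying by the transposed key matrix only has valid shapes when the sequence length equals the head dimension, so a small square test case passes and the mismatch only surfaces once the two sizes differ.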

1 week to find one bug, which would have silently killed the project when I tried to enlarge the scale.

I spotted it independently first. Because parts were vibe coded, I wasn't too willing to believe it, and I ummed and ahhed over the week, looking for every other possible answer and running lots of tests before it became the obvious one. When I went back to the AI that generated that part of the code and worked through the answer and why the code was wrong, it was really weird. It produced about a page of talking to itself, saying it must be right, and kept switching back and forth before giving up and saying I must be right.

It is satisfying, after all that doubt, to go "oh OK, I might be learning as I go, but I am getting what is happening inside this thing."

Really eerie reading an AI talk to itself and doubt itself...

Finally, after 1 week of effort, I got all the tests passing and all the scaling right, and it's in the ballpark of being the complete maths behind the transformer with correct gradients. Now I just need to do the rest to make it into something usable and trainable.

Project Plan

So the intention is to build a chatbot end to end in C++ to make sure I have all the knowledge about AI down. I prefer to be practical, so learning by doing like this helps me learn.

I have taken inventory of all the parts that need to be built and how long the remaining parts will take to deliver.

A transformer model: mostly built, and the different parts are all tested as per the above. What I should do next is encapsulate it so that the stuff I do in Main now is all in a class that manages all the parts and lets you build layers into it.

Timeline: 1-2 weeks (want to try and do it in a week). The reason it might take longer is that the new object would be another maths-heavy structure and, as above, I then have to build testing and asserts and possibly look at whether the gradient explodes or vanishes with a larger model with more layers (this has a low chance of being a bit more of a problem, but I think what will happen is I'll build it and just test as I go).

Low estimate 1 week, high 2

A tokeniser to convert text to a numerical representation. In text you have words and the model treats those words as a probability distribution represented by the softmax output. When you want to start feeding in the data, you need to be able to take text and split it up and map that text to inputs and outputs.

There are different types of tokenisers. Whitespace ones just split the data into words on spaces and new lines. This is very fast but handles punctuation and emojis badly. You can then use regex or even byte-level encodings. Byte-level encodings are increasingly used in large language models.

Timeline: 1 week

Low estimate 2 weeks, high 3
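A whitespace tokeniser of the kind described could be sketched as below. The `Vocab` class, `splitWhitespace` and `encode` are hypothetical names chosen for this example, and ids are simply handed out in order of first appearance.

```cpp
#include <cstddef>
#include <sstream>
#include <string>
#include <unordered_map>
#include <vector>

// Splits on any run of spaces, tabs or new lines.
std::vector<std::string> splitWhitespace(const std::string& text) {
    std::istringstream in(text);
    std::vector<std::string> tokens;
    std::string tok;
    while (in >> tok) tokens.push_back(tok);
    return tokens;
}

// Hypothetical vocabulary: maps each distinct token to an integer id.
class Vocab {
public:
    // Returns the existing id, or assigns the next free one on first sight.
    int idFor(const std::string& token) {
        auto it = ids_.find(token);
        if (it != ids_.end()) return it->second;
        const int id = static_cast<int>(tokens_.size());
        ids_.emplace(token, id);
        tokens_.push_back(token);
        return id;
    }
    const std::string& tokenFor(int id) const {
        return tokens_.at(static_cast<std::size_t>(id));
    }
    std::size_t size() const { return tokens_.size(); }

private:
    std::unordered_map<std::string, int> ids_;
    std::vector<std::string> tokens_;
};

// Converts text to a sequence of token ids, growing the vocabulary as needed.
std::vector<int> encode(const std::string& text, Vocab& vocab) {
    std::vector<int> ids;
    for (const auto& tok : splitWhitespace(text)) ids.push_back(vocab.idFor(tok));
    return ids;
}
```

The punctuation weakness shows up immediately: with this sketch, "hello" and "hello!" get two unrelated ids, which is the sort of case regex or byte-level schemes are meant to handle better.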

A training pipeline to train on conversational data. I probably need something that stores the data for this and something that converts it into that storage design. Will need to build a loss function (and research them).
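The post has not settled on a loss function yet. One common choice for next-token prediction is cross-entropy over the softmax output, sketched here purely as a candidate to research. The function names are made up for this example, and subtracting the maximum logit is a standard trick to avoid overflow in `exp`.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Numerically stable softmax: shift by the max logit before exponentiating.
std::vector<double> softmax(const std::vector<double>& logits) {
    assert(!logits.empty());
    const double maxLogit = *std::max_element(logits.begin(), logits.end());
    std::vector<double> probs(logits.size());
    double sum = 0.0;
    for (std::size_t i = 0; i < logits.size(); ++i) {
        probs[i] = std::exp(logits[i] - maxLogit);
        sum += probs[i];
    }
    for (double& p : probs) p /= sum;
    return probs;
}

// Cross-entropy for one position: -log of the probability given to the
// correct token id. Lower is better; 0 means full confidence in the target.
double crossEntropy(const std::vector<double>& logits, std::size_t target) {
    assert(target < logits.size());
    const std::vector<double> probs = softmax(logits);
    return -std::log(probs[target]);
}
```

A handy sanity check is that an untrained model with roughly uniform outputs over a vocabulary of size V should start near a loss of log(V).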

Need to look at training data. Either build a custom dataset or get an existing one, such as the Cornell movie dialogues corpus, the Microsoft social media conversation corpus, or Reddit comments. 4chan for a super spicy offensive chatbot, anybody? The 4chan one is a joke, but since we build chatbots and try to sanitise them, I do wonder what would happen with a super offensive one.

I think a custom chatbot integrated into my wholly random game generator could be trained on the script data from Cornell and could write scripts for the game. I would then have a wholly random traditional roguelike game generator with plot, etc. I think that would be fun.

I feel this will be the biggest part, and I will probably spend some time building different visualisations and data systems here.

Timeline: 1 week, plus another week (maybe two) for building visualisations. Try to set up initial training in parallel, but if it has to be done consecutively it may take a further week.

Low estimate 3 weeks, high 6

An inference pipeline to generate responses. Also a process for saving and loading a model, and a look at sampling strategies. Might need to look at temperature and similar settings within the model at this point.

Timeline: 1 week.

Low estimate 3 weeks, high 7
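As a starting point for the sampling side, here is a minimal sketch of temperature sampling: divide the logits by a temperature before the softmax, then draw a token id from the resulting distribution. Function names are assumptions for illustration; `std::discrete_distribution` and `std::mt19937` come from the standard `<random>` header.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

// Softmax over logits / temperature. A temperature below 1 sharpens the
// distribution, above 1 flattens it. Temperature must be positive.
std::vector<double> softmaxWithTemperature(const std::vector<double>& logits,
                                           double temperature) {
    assert(!logits.empty());
    assert(temperature > 0.0);
    const double maxLogit = *std::max_element(logits.begin(), logits.end());
    std::vector<double> probs(logits.size());
    double sum = 0.0;
    for (std::size_t i = 0; i < logits.size(); ++i) {
        probs[i] = std::exp((logits[i] - maxLogit) / temperature);
        sum += probs[i];
    }
    for (double& p : probs) p /= sum;
    return probs;
}

// Draws one token id according to the given probabilities.
std::size_t sampleToken(const std::vector<double>& probs, std::mt19937& rng) {
    assert(!probs.empty());
    std::discrete_distribution<std::size_t> dist(probs.begin(), probs.end());
    return dist(rng);
}
```

Seeding the generator with a fixed value makes generated replies reproducible, which fits the test-heavy approach used so far.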

A user interface that is currently a command line but could be scaled up by wrapping it in an API or mobile app. This should be the final orchestration step. I would need to look up different deployment options. I feel that halfway through I will have figured out a use for it, so I am mostly leaving it here.

Timeline: 1 week (possibly more if I have decided I want a specific interface like a website setup).

Low estimate 4 weeks, high 9

Optimisation/Debugging?: It might be worth looking over what could make the system faster at this point. I have just followed normal coding processes, but there may be gains in things like helper functions, and possibly in looking for places where double loops are used and not needed, etc.

Also, I have already learned a lot about methods of keeping the gradient lower from implementing the gradient tests above, so I could see some tuning and a look at how the error is back-propagated to improve the model. I could get quite into the weeds looking at gradient propagation at a per-layer level and checking for spikes.

Low estimate 5 weeks, high 12

Follow-up enhancements (post completion): add context and memory. Look at handling multi-turn conversations. Look at database and knowledge base connections to provide factual answers.

Summary of papers I am pulling ideas from

I am trying to pull a paper and read it each week to increase my knowledge.

Attention Is All You Need (2017 paper): Has a great encoder and decoder diagram, which I am a fair way away from recreating in full. Every time I learn some aspect of AI, this paper makes more sense. It replaces recurrence and convolution with pure attention, which greatly reduced the training time compared to RNNs (recurrent neural networks). The process computes the relationships between all the tokens at the same time. It adds positional embeddings to represent word order in the tokens, and uses layer norm and residual connections to allow deeper networks to be trained.

It led to GPT models, BERT and its variants, vision transformers and multimodal generative models.

Reading and rereading this paper has made me realise I had not properly understood residual connections. In my head, they were very much like recurrent connections, but only a small number of them, limiting the exploding gradient by only having a "little recurrence". I thought that was how the system worked, in a vague "it's like an RNN but better and smarter" way that was light on the details, and that maybe this made an attention head a little like a partial differential equation. I really get that it's not that now.

Residual connections solve several problems in deep learning by taking the input to a sub-layer such as the attention head and adding it back to that sub-layer's output, such that output = X + Function(X) (Function means the output of the rest of the multi-headed system). This protects against the vanishing and exploding gradients of vanilla deep learning because the back-propagated error has this other route that goes all the way back to the original input. It preserves the original information and enables deeper transformer neural networks, whereas in a normal deep learning model the back-propagated error might drop too low.

Residual connections appear around both the feed-forward and the attention sub-layers. So I have learned something from this project already.
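The formula output = X + Function(X) can be sketched in a few lines. Here `residual` and the `Vec` alias are hypothetical names for this example, and the wrapped function stands in for whatever sub-layer (attention or feed forward) is being skipped around.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>

using Vec = std::vector<double>;

// Applies output = x + f(x). The wrapped function must return a vector of
// the same length as its input, otherwise the addition is not defined.
Vec residual(const Vec& x, const std::function<Vec(const Vec&)>& f) {
    const Vec fx = f(x);
    assert(fx.size() == x.size());
    Vec out(x.size());
    for (std::size_t i = 0; i < x.size(); ++i) {
        out[i] = x[i] + fx[i];
    }
    return out;
}
```

Even if the wrapped function outputs all zeros, the input still comes through unchanged. In the backward pass the same addition means the gradient with respect to X is the upstream gradient plus whatever flows through the function, so there is always a direct route back.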

Closing Thoughts

So I am hoping to have an end-to-end code base for training a chatbot in C++. I will then have my own chatbot project, which I think I will try out in a few other projects.
