"Do not let what you cannot do interfere with what you can do" - John Wooden

Introduction

This is my weird software development blog.

I have been building a biologically plausible neural network that mimics the biological brain. It works using neuroplasticity rules and builds itself.

I had a issue where I realised I had overestimated its accuracy.

Back tracking and retractions

First I had the slight embarrassment of discovering I had over inflated the accuracy of reported model. When it first worked for speed I had it pickup the test to be as below. It should have been <= not < but I had not considered this. If nothing else was higher than maxi it would assume it got it right and output true.

Elsewhere in the code and outside the model it checks that at least one neurone has some positive activity (they can be negative or positive in their output). So I know that the network is still doing something each step and so had not thought to check that this firing might not be at a output or be so small as to be equal across all outputs.

The network has multiple outputs 1 for each letter or number in the alphabet and the highest is treated as the AIs choice at any timestep.

So information was cycling inside but not having an affect on the outputs. Or the affect was equal across all but it would output that it got a right answer. This inflated accuracy. I had not considered output at all outputs could be zero because there is so many of them.

The point I realised my mistake is when I did some things to the network that killed all activity and it told me it was really accurate.

So I had to revise accuracy down and considered I had just messed it up. Though upon further testing I managed to get some successes where the AI would be accurate more than once in a row. The below output 777 and 111 is genuine accuracy.

I changed my testing protocols so where this happens in the new testing protocol it is given a -0.5 (values should be zero cantered but between -1 and 1 so its a fairly high penalty). If another value is higher the score is the wrong value certainty minus the right value. If the model is right it is scored equal to how certain it is in the correct answer.

Therefore to get a better score it just needs to be continuously firing with no breaks at its output points, less certain about the wrong answers and more about the right. I am doing that because I cannot afford to test big models that might be right and so intend to test a lot of very small AI I know will unlikely to have capacity to learn properly but nonetheless if I can show improvements that im0provements is hoped to translate when I scale up.

Alongside the neuroplasticity rules you should get to a point where you have a very detailed brain emulation that will build its own architecture while learning, has memory, learns timing and "thinking" like processes. A caveat here is that it could satisfy that by being on a very small bit but that should not happen because of its nature as a "spiking" neural network though above already shows what happens when I think something "should" or "should not" happen.

In my defence that's still a AI thinking I can still hang my hat on the it's a thinking machine part.

Restart And Recalibrate

I still want to build a biologically plausible neural network and there still seems room to grow so despite the falling flat on my face the fundamental mission seems valid. Build a brain emulation and study its use as a AI.

I then heavily curtailed the AI size to be able to rattle of tests of reading through a whole book and have been running comparisons of the models to detect what small changes to the algorithm do in terms of reducing error.

I restarted but then moved onto using a data mining tool that I wrote to identify changes I could make to the chemical composition of the neurone as it learned to improve its operations within the neural network. This ran it would update the neurone normally then run a simulation of the previous timestep as if its own chemicals had been changed and cross referenced that against timing, pacing and the build up the chemicals in the cell.

My hypothesis is that in the biological brain the cell leaks these chemicals and learning takes places because Glial cells, neural transmitters opening cell ion channels change the internal composition within that cell. This changes the exact timing that firing takes place and the curve and modulation of the differential equation that underpins that cell.

Usually this would just break the cell but this mix seems stable. Also with the new testing protocol I do know it is firing at its output site and lasting longer.

This then printed out recommendations and I applied them I have run them side by side it reduces standard error T-statistic:-1.5577438290555212, has a P-value:0.12535712775940902 (chance of being wrong or new tests seriously changing the distribution is 12%). So that would look to say that I am close but need more data to confirm as really I need to get it down to 5%.

I need to spend some time trying different combinations and safety controls on it. Though I am back to being productive again. I have just started testing for upgrading the neuroplasticity algorithm.

Left was original right is new one. The right also looks to improve with multiple epochs of trainning which was surprising. Though the sample size is tiny currently so that might reverse.

Network Analysis

A aspect of these biological simulations is they really do rewrite themselves. Individual neurones will using rules derived from biology prune and add other neurones. A neurone can even sort of migrate around until it finds the right spot. I really have little or no understanding on what the networks they produce are like.

I have not done a plethora of network or graph analysis in the past.

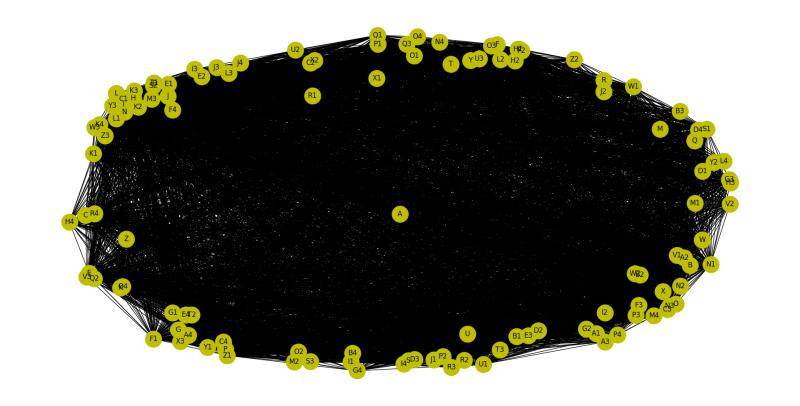

I started network analysis and found NetworkX 2d network visualisations pretty good. It has been a bit perplexing because A) on 4 different tests each are slightly different B) they all have 2 communities with one community having 1 member C) on all measures of network analysis there are two groups one group highly close and other highly between D) they mostly lo0ok like a Hopfield network except where like below some areas are closer than others and strangely 1 highly centralised node in the network.

I felt I did not expect them to be this densely connected and if it was that I would just say it was "Hopfield like" but it does have distinct values of closeness and betweenness in the population so despite being so dense and too dense to see them the data says there are distinct pathways and preferred in the network and it probably has some form of different areas of its "brain" doing different things.

You can see the chats below. That was a bit strange very brain like for a little toy model.

Conclusion

So it still stings about the mistake on accuracy I think what matters is whether it actually works and is reducing accuracy and I think I can point to that so I think it should continue doing it.

I used to post this blog on LinkedIn and I think I should stop that because this is kind of a very experimental trial and error process. I wanted to test strange AI and stuff and previously I put it there as this was a portfolio for my data science/data analytic skills. Given that I am being a bit experimental that is likely to showcase my mistakes as well.

I have also looked at going back to university and even at the PHD level I cannot find something that is looking at just getting on with things and emulating the brain and largely the statistical model of AI is in dominance even in universities. Therefore my interest in this is clearly very niche, probably unlikely to get me a job so it seems unproductive to put on LinkedIn.

I probably could not get into a PHD course if I tried so their existence or not is irrelevant.

All in all I think I am just going to put this on a go slow. Focus on the wholly random game and just enjoy stuff a bit more. I still want to investigate this AI model but I want to curtail putting odd software development on LinkedIn as I'm uncertain if it's particularly forms a portfolio or it's just documenting my frustrations with a specific AI design.

Add comment

Comments