“Let’s talk about Minimum Viable Product, or as I like to call it: the art of building just enough to find out you’re wrong.”

― Scott M. Graffius, Agile Protocol: The Transformation Ultimatum

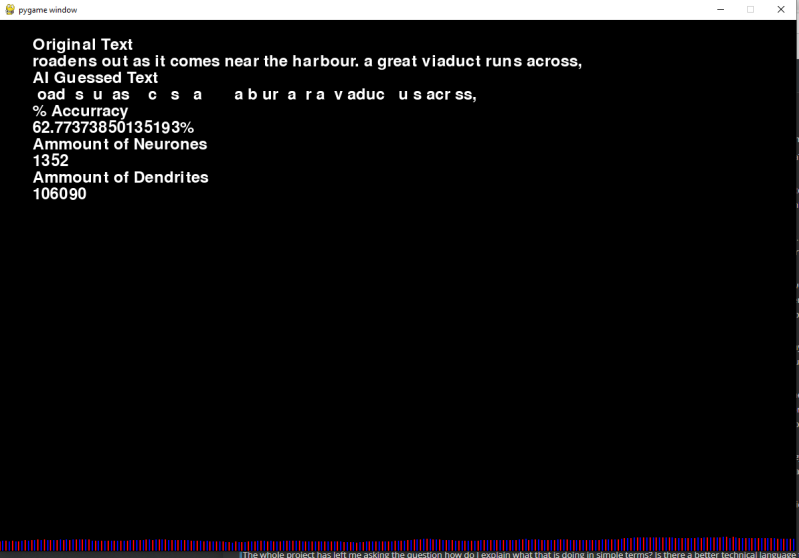

Below is the video of my minimum viable product: a brain simulation. Yes, as per the quote, I found out I was wrong about some things. But this is meant to be a brain, or at least an algorithm like one, so of course it would engender more questions than answers.

I should also explain what I just said, because some people are coming to this blog for the first time. Hello, I am Joe, and this is a blog about doing weird software development. I started out wargaming how to simulate a human brain, and have slowly expanded the scope until I started to implement that exact thing.

I took heavy inspiration from the Blue Brain Project for this. Cue a little research: I found that real, true AI felt a little more real in the Blue Brain Project, and that someone really ought to try making it a bit better, versus that transformer thing.

My personal reason for doing so is that I grew up with AI in movies and was kind of disappointed to find out that AI as implemented would never say "I'm sorry, Dave. I'm afraid I can't do that." It lacks a host of features needed to have that intent: it lacks the thinking skills, and the competencies, to be murderous.

3 Is the Magic Number

The third test creates a stable network wholly out of neural plasticity processes, rearranging neurones and their connections in a biologically plausible way.

It has the benefit that I can just spawn in new neurones in the places that best help the network. Also, like us, because it's all differential equations it has timing and internal flows, meaning it's closer to being a thinking machine than just a mapping of input to output. It is able to eerily map things it does not even have inputs from.
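To make the "timing and internal flows" idea concrete, here is a minimal sketch. It is not the code from the video. It uses a leaky integrate-and-fire neuron, which is a much simpler stand-in for the Hodgkin and Huxley style models mentioned later in this post, and every name and parameter value in it (`simulate_lif`, `tau`, the thresholds, the input current) is an assumption picked for illustration. The point is only that a unit governed by a differential equation carries its own state forward in time, so it keeps doing something after the input is switched off.

```python
def simulate_lif(currents, dt=1.0, tau=10.0, v_rest=0.0, v_thresh=1.0, v_reset=0.0):
    """Forward-Euler integration of dv/dt = (-(v - v_rest) + I) / tau.

    Returns the voltage trace and the step indices at which the neuron spiked.
    All parameter values are illustrative, not taken from the project.
    """
    v = v_rest
    trace, spikes = [], []
    for step, current in enumerate(currents):
        # One Euler step of the membrane equation.
        v += dt * (-(v - v_rest) + current) / tau
        if v >= v_thresh:
            # Threshold crossed: record a spike and reset the voltage.
            spikes.append(step)
            v = v_reset
        trace.append(v)
    return trace, spikes


# Drive the neuron for 30 steps, then remove the input entirely.
currents = [1.5] * 30 + [0.0] * 30
trace, spikes = simulate_lif(currents)

print(spikes)     # [10, 21]
print(trace[30])  # still above zero: the state outlives the input
```

After step 30 there is no input at all, yet the voltage is still non-zero and decays on its own timescale. A pure input-to-output mapping would have nothing left to work with at that point.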

Video proof...

Demo Tape

Brain Simulation Test Demo - Part 3

Spooky huh...

That sounds like a big deal, and maybe it is. But if you stop and think about it, the way we talk, work and act is entirely from memory and not from inputs.

You only think it is a big deal because the sort of AI we are used to are input-output mappers. They do not map timings or have the internal processes that ordinary differential equations might offer.

If we say AI has to be very liquid, like our biological brains are, we would hopefully get a lot of memory to work with. I then want to see if I can beat other language models using something like this.

Beats the Transformer

My target now is to beat the transformer. My research on how quickly a transformer learns goes like this:

- In the first pass a transformer has about zero accuracy.

- It takes multiple passes, and it might only gain 0.05% accuracy after the first round of training.

- Even when fully trained, a process that takes multiple passes, modern models are 30-50% accurate on next-token guessing.

- This AI shows learning from inception, and you can see it is much faster to grok what is going on.

- This test is meant to show rapid learning in the model on something "thinking" with no inputs. If it gets better than 30-50% accuracy from the first training, then in theory it should be applicable to language tasks.
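The comparison in the list above comes down to one number: the fraction of predictions that match what actually came next. Here is a rough sketch of that scoring, assuming predictions and targets are stored as plain sequences. The function names and the sample data are made up for illustration, and the 30-50% band is simply the figure quoted in the list.

```python
def prediction_accuracy(predicted, actual):
    """Fraction of positions where the prediction matches the target."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must be the same length")
    if not actual:
        return 0.0
    hits = sum(1 for p, a in zip(predicted, actual) if p == a)
    return hits / len(actual)


def beats_band(accuracy, band=(0.30, 0.50)):
    """True only if the accuracy clears the top of the comparison band."""
    return accuracy > band[1]


# Made-up example data: three of five guesses are right.
predicted = list("abcab")
actual = list("abcba")

score = prediction_accuracy(predicted, actual)
print(score, beats_band(score))  # 0.6 True
```

Requiring the score to clear the top of the band, not just the bottom, is the stricter reading of "better than 30-50%".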

OK, so my work got 60-75%, which already beats that transformer figure. That does not mean it would be useful to go and deploy it as an agent tomorrow. But I think it might be applicable to that area.

What is impressive is that it wrote itself under neural plasticity. No architecture: just graft in the new neurones and let it arrange itself.

I think you can see in the video there is enough there to continue testing this out as an alternative to the transformer, and it might have other uses too.

I also really liked building the EEG at the bottom. I think it will need to move up, with red dropping down and blue going up, but it does show that the spiking neural network I built really is spiking.

If you picture blue as going up and red as going down, it sort of resembles the machine doing gamma or beta waves, with rapid fluctuations. Ideally I should move that line up so that blue goes up and red goes down, but the idea is to show it is rapidly spiking and oscillating.

You can see a picture below showing the types of brain waves. In our brains, high levels of thinking are characterised by rapid increases of voltage followed by rapid reductions. It looks like rapid oscillations.

For this AI you can see it flips between blue and red rapidly showing rapid internal voltage oscillations.
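For anyone wondering how a strip like that could be produced, here is one hedged guess, not the actual rendering code. It assumes the strip is the average voltage across all neurons at each time step, coloured blue when it sits above a baseline and red when below. The function names, the baseline of zero and the sample voltages are all invented for the example.

```python
def eeg_strip(voltages_per_step, baseline=0.0):
    """Collapse per-neuron voltages into one coloured sample per time step.

    Assumes blue means above the baseline and red means below it.
    """
    samples = []
    for voltages in voltages_per_step:
        mean_v = sum(voltages) / len(voltages)
        colour = "blue" if mean_v >= baseline else "red"
        samples.append((mean_v, colour))
    return samples


def count_flips(samples):
    """Number of times the strip changes colour between adjacent steps."""
    colours = [colour for _, colour in samples]
    return sum(1 for prev, cur in zip(colours, colours[1:]) if prev != cur)


# Made-up voltages for a two-neuron network over four time steps.
steps = [[0.2, 0.4], [-0.3, -0.1], [0.5, 0.1], [-0.2, -0.6]]
strip = eeg_strip(steps)

print([colour for _, colour in strip])  # ['blue', 'red', 'blue', 'red']
print(count_flips(strip))               # 3
```

Counting colour flips per unit of time gives a crude oscillation rate, which is one way to put a number on "rapidly spiking and oscillating".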

In this AI you can see that neither the output nor the fluctuations are uniform. If you stop and consider that those outputs are mathematically generated, you can see why it is hard to create something that spikes up and down like that and encodes some form of meaning.

Those are the brain waves associated with thinking, hence the claim that this code is a thinking machine is quite a fun and viable claim.

I think I made a machine think. You can think otherwise...

I broke theory

I have been struggling with how to explain this project to people. In some sense I am trying to use differential equations to mock up a thinking machine.

Now, if you have a problem with what I just said about machines and thinking, then you're not alone. I really struggle to explain this even to myself.

The problem is that in there is a self-referencing, self-criticising loop that is definitely recurrent. But when you do it on this scale, is that qualia? Is that conscious? What does that have to do with self-awareness?

It makes sense that during training, the training itself is changing the artificial neurotransmitters in the AI, and that the AI uses those simulated changes of liquid pressure, and where those simulated liquids are in its simulated head, to predict the next event and thus manage the things you see in the video.

But that implies thinking and other stuff which you are not meant to say when you are working with AI. It possibly implies a state of qualia. You start to use words like consciousness. Then you realise what you're saying and try to slip back to using phrases like Hodgkin and Huxley model, ordinary differential equations, concurrency of inputs and outputs in equations.
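For readers who have not met the Hodgkin and Huxley model before, this is the standard textbook form of it: one equation for the membrane voltage $V$ and one for each of the three gating variables. It is shown only as background for the phrase. Whether the code in this project uses exactly this form is not stated here, so treat the link to the project as an assumption.

```latex
\begin{aligned}
C_m \frac{dV}{dt} &= I_{\text{ext}}
  - \bar{g}_{\text{Na}}\, m^{3} h \,(V - E_{\text{Na}})
  - \bar{g}_{\text{K}}\, n^{4} \,(V - E_{\text{K}})
  - \bar{g}_{L}\,(V - E_{L}) \\
\frac{dx}{dt} &= \alpha_x(V)\,(1 - x) - \beta_x(V)\, x,
  \qquad x \in \{m, h, n\}
\end{aligned}
```

Here $C_m$ is the membrane capacitance, $I_{\text{ext}}$ the injected current, the $\bar{g}$ terms are maximum conductances for the sodium, potassium and leak channels, the $E$ terms are their reversal potentials, and $\alpha_x$, $\beta_x$ are voltage-dependent rate functions. The "concurrency" is visible in the structure: the voltage depends on the gates and the gates depend on the voltage, all at the same time.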

If I were to try and sanitise the language for this, I would say that during training amplitude changes occur at points in the network and the network converges on these amplitude changes. Though then I have just implied that it works because of something like a quantum walk, that I implemented it on a classical computer, and that it learned fast because of a quadratic speed-up. It is an explanation that exists in my head as a working theory, but I suspect if I told it to physicists many would laugh.

It is also probably technobabble for "training makes neurone wobble a bit", and now you know why that physicist is laughing at that being my big theory for machine consciousness. It can still be talked about in that sense, though, because it is reliant on energy levels and simulates a number of physical changes, which in turn change the amplitude of a neurone's output.

So yes, I just said something about quantum on a classical computer, but it's a good analogy to explain what I think is going on.

Does that make sense? No, but that is my point: I built an AI trying to emulate the biological norms more closely, and my language has become a bit broken because of it. I would say the simulated changes in liquid pressure in the AI are analogous to changes in the human brain, which allows "thinking" to be better implemented in this network than in the transformer. But largely there is no unified explanation for why that would work, or why the brain does much of what it does compared to regular ReLU transformer AI.

A big weakness I have found is that when googling universities on this subject, I have found very few degrees or even PhDs working in this space. They are usually working on the transformer architecture, or using these processes only for small simulations of neural tissue and not for practical tasks or as a genuine branch of AI research.

So I have a problem. I could explain what I think the AI is doing as an anecdote, a philosophy question about thinking, a biological simulation, an AI neural network or a physics experiment. Clearly none of these are great ways to talk about this AI.

The whole project has left me asking: how do I explain what it is doing in simple terms? Is there a better technical language that describes this? How would I explain what this is to different audiences? Could I make this simple enough to explain to my mum?

You see the problem? As soon as you talk about "thinking" there is a lot of extra baggage. It is easier when AI is just mapping input tokens to output tokens. Truth be told I just copied biology as much as possible... I probably do not fully understand what it is doing...

More Work Needed

It looks better if you only watch the start. The ability to spawn in neurones where I want and connect them where the network is weakest is really great. If you watch the video you can see that it blows past the target while using that process. It then starts lowering its accuracy.
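As a rough illustration of "spawn a neurone and connect it where the network is weakest", here is a toy sketch. It is not the project's code. It assumes "weakest" means the unit with the highest recent prediction error, and it stores the network as plain dictionaries. The names `spawn_neuron`, `weights` and `errors`, and the starting weight of 0.1, are all hypothetical.

```python
def spawn_neuron(weights, errors, initial_weight=0.1):
    """Add one neuron wired to the unit with the highest recent error.

    weights: dict mapping (source_id, target_id) -> connection strength
    errors:  dict mapping neuron id -> recent prediction error
    Both dicts are updated in place. Returns the id of the new neuron.
    """
    weakest = max(errors, key=errors.get)  # unit that is struggling most
    new_id = max(errors) + 1               # next unused integer id
    # Connect in both directions so the new unit can listen and respond.
    weights[(new_id, weakest)] = initial_weight
    weights[(weakest, new_id)] = initial_weight
    errors[new_id] = 0.0                   # no error history yet
    return new_id


# Made-up three-neuron network where neuron 1 has the worst error.
weights = {(0, 1): 0.5, (1, 2): 0.4}
errors = {0: 0.1, 1: 0.7, 2: 0.3}

new_id = spawn_neuron(weights, errors)
print(new_id)                   # 3
print(sorted(weights.keys()))   # [(0, 1), (1, 2), (1, 3), (3, 1)]
```

Starting the new connections at a small weight means the newcomer nudges the network instead of overturning what it has already learned. The plasticity rules then decide whether those connections grow or fade.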

It raises the question for me: what causes it to fall back? Is it capacity? Do you need a certain size? I have a host of things I can fix in code, and an experimental process to compare two AIs and pick which is better statistically.
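One common way to compare two models statistically is a permutation test on their accuracy across repeated runs. The sketch below shows that technique only. It is not a description of the experimental process mentioned above, and the accuracy numbers in it are invented purely to exercise the code.

```python
import random
from statistics import mean


def permutation_test(scores_a, scores_b, n_permutations=2000, seed=0):
    """Two-sided permutation test on the difference in mean score.

    Returns (observed_difference, p_value). A small p-value suggests the
    gap between the two models is unlikely to be down to run-to-run luck.
    """
    rng = random.Random(seed)
    observed = mean(scores_a) - mean(scores_b)
    pooled = list(scores_a) + list(scores_b)
    n_a = len(scores_a)
    extreme = 0
    for _ in range(n_permutations):
        # Shuffle the labels: pretend the runs could belong to either model.
        rng.shuffle(pooled)
        diff = mean(pooled[:n_a]) - mean(pooled[n_a:])
        if abs(diff) >= abs(observed) - 1e-12:
            extreme += 1
    # Add-one correction so the estimate is never exactly zero.
    return observed, (extreme + 1) / (n_permutations + 1)


# Invented accuracies from five runs of each of two hypothetical models.
model_a = [0.62, 0.70, 0.66, 0.73, 0.68]
model_b = [0.41, 0.38, 0.45, 0.36, 0.43]

difference, p_value = permutation_test(model_a, model_b)
print(round(difference, 3), p_value < 0.05)
```

The appeal of a permutation test here is that it makes no assumption about how accuracy is distributed, which suits an unusual architecture where nobody knows what the noise looks like yet.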

I want to find actual real-world places to try and use this weird AI design that I have built. If you think of one, let me know (I may charge). I will probably start to look at scaling and different architectures for this one.

Conclusion

So I am going to claim that as a minimum viable product; a minimum viable consciousness, if you will (too much?). That is given that I started this process knowing nothing, essentially just wargaming how you might proceed to build AGI or ASI, and got to this point.

With no inputs it probably should not work at all, as most of our AI thinking is focused on mapping inputs to outputs. As soon as we start talking about AI doing something like "thinking", it is a philosophical minefield. I have already fallen out with someone who I think thinks I am having them on. The problem is I do not blame them, even though unfortunately I am not having them on. The language gets very confusing.

The thing I have found interesting with all this is that things like the Hodgkin and Huxley model come from the 1950s, and this model is an ordinary differential equation model, a phrase that in its neural network sense dates back to a 2018-2019 paper. Historically AI was this. Instead it's been redefined to almost only be Gen AI, and within Gen AI a specific architecture, the transformer, and within that a specific set of suppliers and businesses.

The problem is that the transformer, agents and the like have sort of completely taken over the world right now, and approaches like this, which seek a higher degree of emulation, seem to have been forgotten about, to the point where AI is a set of linear algebra equations to most people, even though our brain uses processes better modelled as differential equations.

Online there will be an influencer claiming they took advice from a set of linear algebra equations in a transformer. Or, even stranger, claiming that it is acting as an agent for them despite knowing it has no intrinsic memory.

I have found it really frustrating that AI today is the transformer. I think the transformer is an amazing piece of technology, but it is sad that we grew up watching sci-fi about machines that could think, and about transhumanism, only for people to be told that all you need to get AGI is sufficient matrix calculations and an attention mechanism.

Doing this project with differential equations really has helped broaden my horizons and made me think about AI more broadly.

Despite being down about transformers, I am feeling a bit bullish about the future of AI. The other day I started thinking: hey, how hard would it be to try copying a brain onto that computer? You can see it did not need inputs, so I wonder if I can sync it up with a human brain. That would be a quick way to find out if I got the code right. I did take certain liberties with the maths, but I am sure that will be fine...
