"The idea that the brain can change its own structure and function through thought and activity is, I believe, the most important alteration in our view of the brain since we first sketched out its basic anatomy and the workings of its basic component, the neurone." - Norman Doidge

I have been building a brain simulation as a way to explore what technical hurdles would need to be overcome to reach AGI and ASI, by comparing what we know about AI with what we know about the brain.

Last week I looked into the different learning capabilities that the brain possesses and AI does not. I have been going through each of these learning mechanisms and trying to apply at least a crude approximation of each one to a Hodgkin-Huxley model simulation.

The Hodgkin-Huxley model is a set of differential equations used to predict the behaviour of biological neurones. It describes spiking neurones like ours, where the neurone fires a spike when activated. Each spike is followed by a refractory period during which the neurone cannot fire, which makes this kind of maths quite different from the maths behind the more common varieties of artificial neural network.
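For readers who have not met the model, here is a minimal single-neurone sketch. It is not the simulation behind this post: it uses the textbook squid-axon parameters (resting potential around -65 mV), plain forward-Euler integration with a 0.01 ms step, and counts any upward crossing of 0 mV as a spike. Those are standard illustrative choices rather than tuned values.

```python
import math

# Textbook Hodgkin-Huxley squid-axon parameters (modern -65 mV resting convention).
C_M = 1.0                               # membrane capacitance, uF/cm^2
G_NA, G_K, G_L = 120.0, 36.0, 0.3       # maximal conductances, mS/cm^2
E_NA, E_K, E_L = 50.0, -77.0, -54.387   # reversal potentials, mV


def _ratio(x, k):
    """x / (1 - exp(-x / k)), replaced by its limit k when x is ~0."""
    if abs(x) < 1e-7:
        return k
    return x / (1.0 - math.exp(-x / k))


def rates(v):
    """Opening (alpha) and closing (beta) rates of the m, h and n gates, in 1/ms."""
    a_m = 0.1 * _ratio(v + 40.0, 10.0)
    b_m = 4.0 * math.exp(-(v + 65.0) / 18.0)
    a_h = 0.07 * math.exp(-(v + 65.0) / 20.0)
    b_h = 1.0 / (1.0 + math.exp(-(v + 35.0) / 10.0))
    a_n = 0.01 * _ratio(v + 55.0, 10.0)
    b_n = 0.125 * math.exp(-(v + 65.0) / 80.0)
    return a_m, b_m, a_h, b_h, a_n, b_n


def simulate_hh(i_ext, t_max=50.0, dt=0.01, v0=-65.0):
    """Forward-Euler run of one neurone under a constant current (uA/cm^2).

    Returns the voltage trace (mV) and spike times (ms, upward 0 mV crossings).
    """
    a_m, b_m, a_h, b_h, a_n, b_n = rates(v0)
    # Start each gate at its steady-state value for the resting voltage.
    m, h, n = a_m / (a_m + b_m), a_h / (a_h + b_h), a_n / (a_n + b_n)
    v = v0
    trace, spikes = [v], []
    for step in range(1, int(round(t_max / dt)) + 1):
        i_na = G_NA * m**3 * h * (v - E_NA)   # fast sodium current (depolarises)
        i_k = G_K * n**4 * (v - E_K)          # delayed potassium current (repolarises)
        i_l = G_L * (v - E_L)                 # passive leak
        a_m, b_m, a_h, b_h, a_n, b_n = rates(v)
        v_new = v + dt * (i_ext - i_na - i_k - i_l) / C_M
        m += dt * (a_m * (1.0 - m) - b_m * m)
        h += dt * (a_h * (1.0 - h) - b_h * h)
        n += dt * (a_n * (1.0 - n) - b_n * n)
        if v < 0.0 <= v_new:
            spikes.append(step * dt)
        v = v_new
        trace.append(v)
    return trace, spikes
```

With a constant 10 µA/cm² input, `simulate_hh(10.0)` produces a regular train of spikes separated by recovery gaps, the refractory behaviour described above; with `simulate_hh(0.0)` the neurone sits at rest and never fires.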

This week I want to delve into these capabilities, working through how to optimise each one and discussing it in detail. I am going to start with my favourite: neural plasticity, the way the brain can grow new synapses between neurones and remove connections that do not contribute to its firing. In essence, the brain is always re-architecting itself from the ground up.

From my reading, when a neurone has too high a level of calcium ions, this stimulates it to grow new synapses, usually preferring other neurones that also have high calcium. Likewise, when its calcium is too low, it starts pruning, releasing enzymes that remove synaptic connections to other neurones, starting with the neurones that also have the lowest calcium levels.

So I set up a test where the network would rewire itself, growing new connections, when a neurone's calcium was above an arbitrary threshold, and would prune itself when it was below. This was a first look at how neural plasticity might work in the mammalian brain and how it might contribute to AI in the future.
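A minimal sketch of that growth-and-pruning rule is below. It assumes the network is stored as a plain Python dict mapping each neurone to the set of neurones it sends synapses to, and that each neurone already has a calcium level between 0 and 1. The function name `plasticity_step`, the thresholds `high=0.7` and `low=0.3`, and the caps on how many synapses change per step are hypothetical choices for illustration only.

```python
def plasticity_step(adj, calcium, high=0.7, low=0.3, max_new=2, max_prune=1):
    """Apply one round of calcium-gated synapse growth and pruning, in place.

    adj: dict mapping each neurone to the set of neurones it connects to.
    calcium: dict mapping each neurone to its current calcium level.
    Returns (synapses_grown, synapses_pruned).
    """
    grown = pruned = 0
    for node in list(adj):
        ca = calcium[node]
        if ca > high:
            # Too much calcium: grow synapses, preferring high-calcium partners.
            # Assumption: only partners that are themselves above `high` qualify.
            candidates = [v for v in adj
                          if v != node and v not in adj[node] and calcium[v] > high]
            candidates.sort(key=lambda u: calcium[u], reverse=True)
            for v in candidates[:max_new]:
                adj[node].add(v)
                grown += 1
        elif ca < low:
            # Too little calcium: prune, starting with the lowest-calcium partners.
            for v in sorted(adj[node], key=lambda u: calcium[u])[:max_prune]:
                adj[node].discard(v)
                pruned += 1
    return grown, pruned
```

For example, with `adj = {0: set(), 1: set(), 2: {0, 3}, 3: set()}` and `calcium = {0: 0.9, 1: 0.8, 2: 0.1, 3: 0.5}`, neurones 0 and 1 link to each other and neurone 2 drops its synapse to 3 (the lower-calcium partner). In a full simulation the calcium levels would be recomputed from the spiking activity between calls.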

Something our current AI cannot do is rewrite or rebuild itself, and I wondered whether one day it could.

As it learns, could an AI mimic the biological processes that decide when, and with which other neurones, a neurone reaches out and connects?

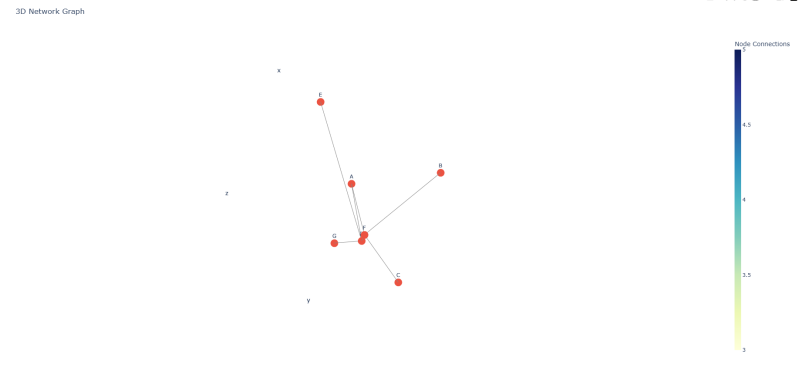

These connections create interesting networks, and this is my first attempt at integrating that process into an AI simulation.

Visualisation and hypothesis

If you apply these rules, connecting neurones when a neurone's calcium content is too high and pruning when it is too low, then we can start to guess what is going on. Calcium levels rise and fall with the firing of a given neurone, so it is only logical that parts of the brain should start to synchronise their timing.
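To make the timing argument concrete, here is a sketch that treats calcium as a simple leaky trace: each spike adds a fixed pulse and the level decays exponentially between spikes. The Hodgkin-Huxley equations themselves do not include calcium, so this trace, its 20-step time constant and its pulse size are assumptions for illustration. Two neurones that spike at the same moments produce calcium traces that rise and fall together, which is why a calcium-gated rule would tend to pair up neurones with similar timing.

```python
import math


def calcium_trace(spike_steps, n_steps, tau=20.0, pulse=1.0):
    """Hypothetical calcium proxy: +pulse on each spike, exponential decay between spikes.

    spike_steps: time steps at which the neurone spiked.
    tau: decay time constant, measured in time steps.
    """
    decay = math.exp(-1.0 / tau)
    spikes = set(spike_steps)
    ca, trace = 0.0, []
    for step in range(n_steps):
        ca *= decay          # calcium is cleared out of the cell between spikes
        if step in spikes:
            ca += pulse      # each spike lets a pulse of calcium in
        trace.append(ca)
    return trace
```

For a single spike at step 10, `calcium_trace([10], 40)` jumps to 1.0 at step 10 and has decayed to about 0.37 (e^-1) by step 30, one time constant later.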

If a neurone is very high in calcium, it is at the start of its firing cycle, just as it is depolarising, and it is therefore seeking to connect with other neurones that fire at around the same time. Neurones that fire at the same time as it does are more likely to contribute meaningful information to it.

This also fits well with Hebbian learning, the rule of thumb for how brains work that "neurones that fire together wire together". It makes sense that neuroplasticity would try to link up the parts of the brain that share the same timing.

Equally, if a neurone is very low on calcium, removing connections to neurones that are also low on calcium is likely to remove neurones whose firing patterns are too similar to its own to change its firing significantly. In other words, a neurone wants inputs from neurones that re-energise it and fire just before it (stimulating it to fire), but not at exactly the same time, which would be redundant.
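The preference described here, for inputs that fire just before a neurone rather than at the same moment, is close to what is known as spike-timing-dependent plasticity (STDP). STDP is a different mechanism from the calcium-threshold rule used in this post, but a sketch of the standard pair-based STDP window shows the same timing preference. The amplitudes and the 20 ms time constant are illustrative values, and returning zero for exactly simultaneous spikes is an assumption of this sketch.

```python
import math


def stdp_dw(t_pre, t_post, a_plus=0.01, a_minus=0.012, tau=20.0):
    """Pair-based STDP weight change for one pre/post spike pair (times in ms)."""
    dt = t_post - t_pre
    if dt > 0:   # input fired just before the neurone: strengthen, more so if closer
        return a_plus * math.exp(-dt / tau)
    if dt < 0:   # input fired after the neurone had already fired: weaken
        return -a_minus * math.exp(dt / tau)
    return 0.0   # exactly simultaneous: treated as redundant here (an assumption)
```

An input arriving 5 ms before the neurone fires is strengthened more than one arriving 30 ms before, and one arriving after the neurone fires is weakened.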

If you apply these two rules, the brain ought to learn timing processes by organising neurones into wirings where they fire with similar timing.
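One candidate test of this, sketched here rather than run, is to bin each neurone's spike times and check whether connected pairs fire in a more correlated way than unconnected pairs. The 5 ms bin width, the helper names and the dict-of-spike-times input are assumptions. A clearly positive `synchrony_gap` after plasticity has run would be consistent with the wiring tracking timing.

```python
import math


def binned(spike_times, t_max, bin_ms=5.0):
    """Count spikes per time bin."""
    counts = [0] * int(math.ceil(t_max / bin_ms))
    for t in spike_times:
        if 0 <= t < t_max:
            counts[int(t // bin_ms)] += 1
    return counts


def pearson(x, y):
    """Pearson correlation; 0.0 if either series is constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / math.sqrt(sxx * syy)


def synchrony_gap(spikes, edges, t_max, bin_ms=5.0):
    """Mean firing correlation of connected pairs minus that of unconnected pairs.

    spikes: dict neurone -> list of spike times (ms); edges: iterable of (a, b) pairs.
    """
    trains = {k: binned(v, t_max, bin_ms) for k, v in spikes.items()}
    nodes = sorted(trains)
    linked = {frozenset(e) for e in edges}
    conn, unconn = [], []
    for i, a in enumerate(nodes):
        for b in nodes[i + 1:]:
            r = pearson(trains[a], trains[b])
            (conn if frozenset((a, b)) in linked else unconn).append(r)
    if not conn or not unconn:
        raise ValueError("need at least one connected and one unconnected pair")
    return sum(conn) / len(conn) - sum(unconn) / len(unconn)
```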

My theory is that the neurones should self-arrange into firing patterns that encode timing behaviours. I have not decided on a test for this yet, but let us see what patterns this process creates. I used the Python libraries NetworkX and Plotly to create 3D graphs of the way the networks formed.
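A sketch of that kind of 3D plot is below. It assumes the network is a NetworkX graph: `nx.spring_layout(G, dim=3)` computes 3D positions, and Plotly's `Scatter3d` draws the edges as line segments and the neurones as markers coloured by their number of connections. The function names, colours and marker sizes are illustrative, not the exact plotting code behind the images in this post. Plotly is imported inside `plot_network_3d` so that the layout step works on its own.

```python
import networkx as nx


def graph_to_3d_traces(G, seed=42):
    """Lay G out in 3D; return edge coordinates, node coordinates and degrees."""
    pos = nx.spring_layout(G, dim=3, seed=seed)
    ex, ey, ez = [], [], []
    for u, v in G.edges():
        # None breaks the line, so each edge is drawn as its own segment.
        ex += [float(pos[u][0]), float(pos[v][0]), None]
        ey += [float(pos[u][1]), float(pos[v][1]), None]
        ez += [float(pos[u][2]), float(pos[v][2]), None]
    nodes = list(G.nodes())
    xs, ys, zs = ([float(pos[n][i]) for n in nodes] for i in range(3))
    degrees = [G.degree(n) for n in nodes]
    return (ex, ey, ez), (xs, ys, zs), degrees


def plot_network_3d(G, seed=42):
    """Build a Plotly 3D figure of the network (requires plotly)."""
    import plotly.graph_objects as go

    (ex, ey, ez), (xs, ys, zs), degrees = graph_to_3d_traces(G, seed)
    edges = go.Scatter3d(x=ex, y=ey, z=ez, mode="lines",
                         line=dict(color="black", width=2), hoverinfo="none")
    nodes = go.Scatter3d(x=xs, y=ys, z=zs, mode="markers",
                         marker=dict(size=4, color=degrees, colorscale="Viridis"),
                         text=[f"{n}: {d} connections" for n, d in zip(G.nodes(), degrees)],
                         hoverinfo="text")
    return go.Figure(data=[edges, nodes])
```

Usage: `plot_network_3d(nx.gnm_random_graph(30, 60, seed=1)).show()` opens an interactive, rotatable view.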

It's a small world network after all

I believe, and I think the visualisations show, that these effects tend to build models that look like small-world networks.

A small-world network is a type of graph in which most nodes are not neighbors of one another, but most nodes can be reached from every other node by a small number of steps. This concept is often referred to as the "six degrees of separation," where any two individuals in a social network can be connected through a short chain of acquaintances.

Small-world networks exhibit two key properties:

1. High Clustering: Nodes in the network tend to form tightly-knit groups characterized by a high density of connections. This means that if node A is connected to node B and node B is connected to node C, there is a high probability that node A is also connected to node C.

2. Short Average Path Length: Despite the high clustering, the average distance between any two nodes in the network is relatively short. This means that you can travel from one node to any other node in the network through a small number of steps.
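Both properties can be measured with NetworkX. The sketch below compares the average clustering coefficient C and average shortest path length L against a random graph with the same number of nodes and edges, combining them into the small-world coefficient σ = (C / C_rand) / (L / L_rand), where values well above 1 suggest small-world structure. It also reports the diameter, the largest number of steps between any two nodes. Ignoring synapse direction and measuring paths on the largest connected component are simplifying assumptions; NetworkX also ships `nx.sigma` and `nx.omega`, which use more careful degree-preserving random references but are much slower.

```python
import networkx as nx


def _largest_component(G):
    """Largest connected component, so that path lengths are always defined."""
    return G.subgraph(max(nx.connected_components(G), key=len))


def small_world_metrics(G, seed=0):
    """Clustering, path length, diameter and sigma relative to a same-size random graph."""
    U = nx.Graph(G)  # assumption: ignore synapse direction for these measures
    R = nx.gnm_random_graph(U.number_of_nodes(), U.number_of_edges(), seed=seed)
    c, c_rand = nx.average_clustering(U), nx.average_clustering(R)
    if c_rand == 0:
        raise ValueError("random reference has no triangles; graph too sparse for sigma")
    core = _largest_component(U)
    path_len = nx.average_shortest_path_length(core)
    path_len_rand = nx.average_shortest_path_length(_largest_component(R))
    return {
        "clustering": c,
        "avg_path": path_len,
        "diameter": nx.diameter(core),  # most steps needed between any two neurones
        "sigma": (c / c_rand) / (path_len / path_len_rand),
    }
```

A Watts-Strogatz graph, the classic small-world model, is a useful sanity check: `small_world_metrics(nx.connected_watts_strogatz_graph(100, 6, 0.1, seed=1))` should give a sigma comfortably above 1.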

Small-world networks are prevalent in various domains, including social networks, the World Wide Web, and biological networks like neural networks in the brain. The structure of small-world networks allows for efficient information transfer and robustness against random failures.

It feels obvious that a brain would network itself like that, so that any decision made in any sub-level or sub-network of the brain could be propagated to every part of the network within a small number of steps. It is interesting that a simple application of this calcium rule does seem to build something similar.

Apart from the visual similarity, I have not yet measured how closely these graphs match a small-world network, or the maximum number of steps between any two points in the graph.

You can see that even at a small scale they form interesting shapes. These shapes often involve bidirectional connections, i.e. most of the black lines are neurones connected both forwards and backwards, so when a neurone fires it stimulates the target neurone and then receives feedback from the other direction.

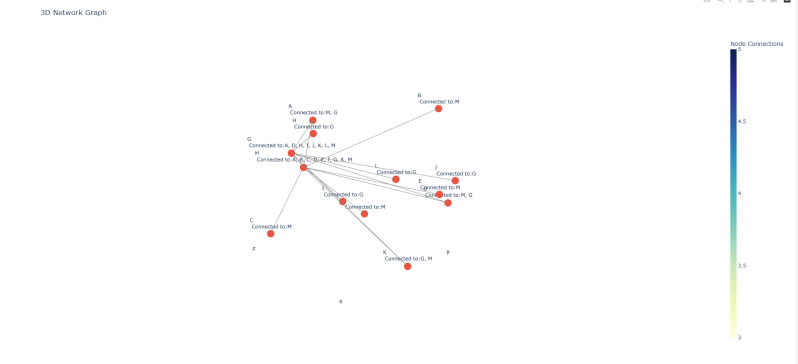

If I add markers listing what each neurone connects to, you can see that a small number of neurones have much higher connectivity, surrounded by satellite neurones that have weak connections but also connect directly back, so that information goes back and forth between the two. I think this helps manage timing and oscillations: when a neurone fires, it passes activity to these lightly connected neurones, which in turn fire, and their timing influences the original neurone's timing.
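One way to pull those hubs and satellites out automatically is sketched below, assuming the network is a NetworkX `DiGraph`. Hubs are taken as roughly the top 10% of neurones by in-plus-out degree, and satellites as lightly connected neurones with a two-way link to a hub. The thresholds and the function name are hypothetical. For a single summary number, `nx.reciprocity(G)` gives the overall fraction of edges that run in both directions.

```python
import networkx as nx


def hubs_and_satellites(G, hub_quantile=0.9, max_satellite_degree=4):
    """Return (hubs, satellites) for a directed network.

    hubs: set of neurones whose in+out degree is in roughly the top (1 - hub_quantile).
    satellites: dict mapping lightly connected neurones to the hubs they share
    a two-way link with.
    """
    degree = dict(G.degree())  # in-degree + out-degree for a DiGraph
    ranked = sorted(degree.values())
    cutoff = ranked[int(hub_quantile * (len(ranked) - 1))]
    hubs = {n for n, d in degree.items() if d >= cutoff and d > max_satellite_degree}
    satellites = {}
    for n, d in degree.items():
        if n in hubs or d > max_satellite_degree:
            continue
        # Reciprocal edge: the satellite both stimulates the hub and hears back from it.
        partners = sorted(h for h in hubs if G.has_edge(n, h) and G.has_edge(h, n))
        if partners:
            satellites[n] = partners
    return hubs, satellites
```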

As the network gets bigger, the number of central points increases.

Even so, there tends to be one or two highly connected central points and a range of these satellite oscillators.

You can see that there always seems to be a clear central mass of the neurones.

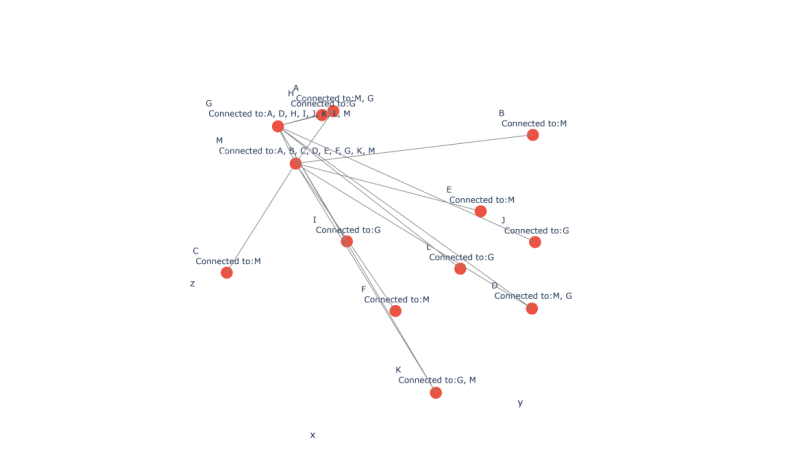

Trying a bit bigger. I find it interesting that each time I scale up, there is always a central mass. I do have even bigger scale-ups, and they always have one highly connected central mass of neurones, often heavily connected to each other.

I need to come back to this later and start identifying which parts of the networks do what, but I think it was a good initial outcome for applying such simple rules.

Looking at a big one in a bit more detail

I still have a lot more scaling to do, but you can see that when I identify a central point, there is a larger subsection of many neurones with very strong connections.

The above has lots of strong connections, but there is then a second hub with, say, two connections. This seems to happen more with each scale-up, but it is not always present.

Initial Testing

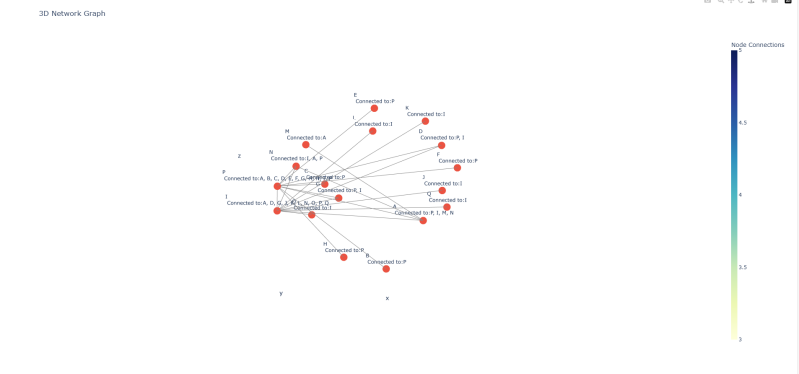

Initial testing seems to show that if you just let them wire together they form interesting patterns, and the number of both new connections and prunings goes up the more neurones are involved.

Connection growth looks slightly non-linear, increasing a little faster than the number of neurones, while pruning looks roughly linear. In other words, growth speeds up faster than linearly as more simulated neurones are added, while pruning rises in proportion.

Mostly I have seen one major connection site with lots of connections, and then a limited number of neurones in a highly sparse arrangement with maybe only one or two connections each. That said, clusters with more connections do seem to appear more often over time.
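Linear versus faster-than-linear growth can be checked by fitting a straight line on a log-log plot of count against network size: the slope is the growth exponent. This is a generic sketch; `sizes` and `counts` would come from the simulation runs, and the values in the check below are synthetic, not results from these experiments.

```python
import math


def loglog_slope(sizes, counts):
    """Least-squares slope of log(count) against log(size).

    About 1 means linear growth; noticeably above 1 means faster than linear
    (2 would be quadratic). Exponential growth would not give a straight line
    on this plot at all; it would curve upwards.
    """
    xs = [math.log(s) for s in sizes]
    ys = [math.log(c) for c in counts]
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    den = sum((x - mx) ** 2 for x in xs)
    return num / den
```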

Researchers say these form 11-dimensional spaces in the brain

I'm not joking; references are at the bottom. But let's think about why scientists say this.

The Blue Brain Project found that the brain forms an 11-dimensional matrix of connections. This is an odd thing to say, as neural networks are usually described as graphs, in terms of nodes, connections, edges and leaves rather than dimensions. It is true that neural networks do maths in more dimensions than we normally picture, because, seen as a graph or as linear algebra, they are computing dot products between many vectors.

There are two things I want to say about this. The first is that it seems like really confusing language. I think what is actually meant is that the brain is doing maths like multiple vectors being combined together, which is a bit beyond our normal ability to visualise; in some sense our brains have 11 or so main subnetworks all involved in decision-making. The second is that, conversely, people are now claiming it as proof that we think in "higher dimensions".

Still, this high dimensionality ought to imply a few things. Neurones ought to be connected in clusters, with a lot of information exchanged between those groups. Saying the brain has 11 dimensions might be really messy language, but it does imply that information ought to be flowing through the system in at least 11 distinct directions, which ought to be an indicator of the complexity of the maths we are dealing with.

It seems very strange to me that they chose the term dimension, and the internet is now full of articles about how our brains might be multidimensional. I think it would have been clearer if they had said the brain has at least 11 distinct systems, modalities or information flows, but they did say dimensions.
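For what it's worth, my understanding is that the Blue Brain work uses "dimension" in the algebraic-topology sense: a group of k+1 neurones that are all connected to one another (a clique, with the connections also flowing in a consistent direction) forms a k-dimensional simplex. The sketch below ignores direction to keep things simple, so it only approximates that measure and is not the Blue Brain method. It also shows that a neurone's number of connections is a different quantity from this kind of dimension.

```python
import networkx as nx


def max_simplex_dimension(G):
    """Largest clique size minus one: a clique of k+1 nodes spans a k-simplex.

    Simplification: edge direction is dropped, whereas directed-clique analyses
    also require the connections to run in a consistent order.
    """
    U = nx.Graph(G)  # find_cliques works on undirected graphs
    return max((len(c) - 1 for c in nx.find_cliques(U)), default=0)


def max_degree(G):
    """Most connections on any single neurone."""
    return max((d for _, d in G.degree()), default=0)
```

For example, a star of 23 neurones all wired to one centre has `max_degree` 23 but a `max_simplex_dimension` of only 1, while four mutually connected neurones form a 3-dimensional simplex.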

It is not the first time this concept has been used; it comes up in the literature. I'd be interested to know whether neuroplasticity on its own might explain it, and whether the brain, its shape and everything about it arise from much simpler rules like the ones I applied here, or whether it is finely designed and encoded in DNA (probably a bit of both). You can't tell me I'm completely wrong, as my simple rules seem to produce at least one central hub in each instance.

If simple rules can produce a small-world network out of simulated neurones' own internal rhythms, then maybe the brain similarly arises from simple rules.

I think I need to test whether the average density of connections increases with the size of a network when applying my plasticity rules. Maybe I will get that 11-dimensional network...
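A sketch of that measurement is below. One subtlety: NetworkX's `nx.density` divides the number of edges by the number of possible edges, n(n-1) for a directed graph, so if connections grow only linearly with network size, density will fall even when each neurone keeps the same number of connections. Reporting mean connections per neurone alongside density separates those two effects. The function name is hypothetical.

```python
import networkx as nx


def connection_stats(G):
    """Return (neurones, density, mean connections per neurone) for one network."""
    n = G.number_of_nodes()
    # nx.density is m / (n*(n-1)) for directed graphs and m / (n*(n-1)/2) for undirected.
    mean_degree = sum(d for _, d in G.degree()) / n if n else 0.0
    return n, nx.density(G), mean_degree
```

Calling `connection_stats` on the final network from each run size, smallest to largest, gives the trend directly.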

Conclusion

I was pleased that it worked at all. The rules were very simple but seem to work, and while I need to build a set of tests to optimise the process and work out what it is doing, I am pleased that the process connects neurones into bunches at all. I half expected it to fragment the network.

I had to leave aside performance testing for this, but I think it's very powerful just to see it working. Anecdotally it does seem better to have it on: when I first applied it, network performance dropped, but after a series of refinements it might now be giving me an improvement of one to two standard errors.
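For reference, one common way to express an improvement in standard errors is to run each configuration several times (for example with different random seeds), take the difference in mean score, and divide by the standard error of that difference. This Welch-style sketch uses only the standard library; the function name and the idea of comparing per-run scores are assumptions about how such a comparison might be set up.

```python
import math
import statistics


def improvement_in_standard_errors(baseline, treated):
    """Difference in mean score divided by the standard error of that difference."""
    diff = statistics.mean(treated) - statistics.mean(baseline)
    se = math.sqrt(statistics.variance(treated) / len(treated)
                   + statistics.variance(baseline) / len(baseline))
    return diff / se
```

Each list needs at least two runs, since `statistics.variance` is the sample variance.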

All of which is to say: having found that it works, I still need to work out how to make it work well.

I think that if this were made to work well, it could allow AI to self-architect, building its networks from its own learning. However, this relies on using these types of neurone simulations, and much of our success with AI has come from sophisticated architectural engineering: the transformer is not well designed at the neurone level, but at the level of its attention-head system. An AI that changes shape during training might not be desirable, as it could add extra compute without gaining more than you would get from simply designing the right architecture.

After all, surely you don't need to keep redesigning an AI, only design it once?

The fixed shape of a transformer's linear algebra is central to its architecture, which relies on dedicated attention heads to function. Constant changes to that shape would also change the internal linear algebra, meaning most of the AI acceleration we have developed would no longer work.

It's a fascinating idea to have AI that simply simulates the brain, but it might be impractical for real-world use because of the lost benefits of AI acceleration.

It was a lot of work just getting this all to work, so I think I will need to do more testing in future weeks to see the effects. Still, it is a startling idea that in the future neuroplasticity algorithms might allow AI to adapt itself while training. To me, this adaptation looks like it produces small-world networks, which are widely thought to be among the best ways of handling information in a network.

It is interesting that it worked at all; you would not expect such a simple rule to start building networks like this. I probably need more time to know whether they are effective, but they do seem to help the network learn, which is surprising, as I would have thought constantly changing the network design would be damaging unless each transformation were beneficial.

I do not know how you would start to prove that it also encodes timing processes into these simulated brains, but maybe the two-way satellite neurones hint that this is what is going on. I have not done many tests on this, but currently it seems to make most of its changes at the start of training and then settle down and stabilise. That leaves me wondering: what exactly is something like neural plasticity encoding or stabilising towards? Usually in machine learning we have, in some sense, an entropy or error that is reduced until a stable state is reached, but it is not clear precisely what the model is changing into with these calcium-based rules.
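One simple way to pin down when the network "settles" is to log how many synapses are grown or pruned at each step, then find the first step from which a rolling average stays at or below a small tolerance. The window size, tolerance and function name below are hypothetical choices.

```python
def settling_step(changes, window=10, tol=1.0):
    """First step from which the rolling mean of structural changes stays <= tol.

    changes[i] is the number of synapses grown plus pruned at step i.
    Returns None if the network never settles (or the log is shorter than window).
    """
    if len(changes) < window:
        return None
    rolling = [sum(changes[i:i + window]) / window
               for i in range(len(changes) - window + 1)]
    settle = None
    # Walk backwards: the answer is the start of the final run of quiet windows.
    for i in range(len(rolling) - 1, -1, -1):
        if rolling[i] > tol:
            break
        settle = i
    return settle
```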

Is discordance being reduced? It is worth considering that it is not clear whether neural plasticity is a form of machine learning, or whether the network simply runs better when all the neurones that fire around the same time are linked together.

The way the brain uses neural plasticity feels like the big thing our brains do that was cut out of traditional ANNs, something we don't understand very well and pretend does not exist when designing AI. By implication, what our brain is doing is an order of magnitude more complex than what ChatGPT does, in that it learns both timings and, through neural plasticity, appears to some degree to learn its very design and architecture from what it is learning.

What else but the brain is, in some sense, self-designed?

Modern AI, often labelled as moving towards AGI status, does none of these things.

The highest level of connectivity I found was 23 connections on one neurone. Surely, if the internet can seize upon the Blue Brain Project as proof that the brain operates in 11 dimensions, then mine works in the 23rd dimension...

Yes I know I'm using the term dimension wrong but then so is everyone else when talking about neural networks.

References

Small world networks

Small-world network - Wikipedia

There is a multidimensional universe in your brain... supposedly

Blue Brain Team Discovers a Multi-Dimensional Universe in Brain Networks - Neuroscience News

11 dimensions

The human brain builds structures in 11 dimensions, discover scientists - Big Think
