"The best thing about memories is making them" - that commercial you're bound to forget

I have been working on trying to build some useful compute out of the Hodgkin and Huxley model of the neurone. It is a bit of a deep dive to highlight the differences between the human brain and artificial neural networks, and to try and learn some things along the way.

The Hodgkin-Huxley model describes how action potentials are initiated and propagated in neurons. It explains how the movement of ions (sodium and potassium) through ion channels in the neuron membrane creates the electrical signals (action potentials). The model uses mathematical equations to represent the flow of these ions and their impact on the membrane potential.
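To make that concrete, here is a minimal sketch of the classic model in Python. It uses the standard textbook squid-axon constants and a plain forward Euler step, so it is not the calcium-extended model used in this post. The function names (`simulate_hh`, `count_spikes`), the injected current and the 0 mV spike threshold are all assumptions picked for illustration.

```python
import math

# Textbook Hodgkin-Huxley constants (squid axon). These are assumptions for
# illustration and are NOT the calcium-extended model described in this post.
C_M = 1.0                  # membrane capacitance, uF/cm^2
G_NA, E_NA = 120.0, 50.0   # sodium: max conductance (mS/cm^2), reversal (mV)
G_K, E_K = 36.0, -77.0     # potassium
G_L, E_L = 0.3, -54.387    # leak


def _ratio(x, y):
    """x / (1 - exp(-x / y)), with the removable singularity at x = 0 handled."""
    if abs(x) < 1e-7:
        return y
    return x / (1.0 - math.exp(-x / y))


def rates(v):
    """Opening (a_*) and closing (b_*) rates of the m, h and n gates at voltage v."""
    a_m = 0.1 * _ratio(v + 40.0, 10.0)
    b_m = 4.0 * math.exp(-(v + 65.0) / 18.0)
    a_h = 0.07 * math.exp(-(v + 65.0) / 20.0)
    b_h = 1.0 / (1.0 + math.exp(-(v + 35.0) / 10.0))
    a_n = 0.01 * _ratio(v + 55.0, 10.0)
    b_n = 0.125 * math.exp(-(v + 65.0) / 80.0)
    return a_m, b_m, a_h, b_h, a_n, b_n


def simulate_hh(i_ext=10.0, t_total_ms=50.0, dt_ms=0.01, v0=-65.0):
    """Forward Euler integration; returns the membrane voltage (mV) at each step."""
    a_m, b_m, a_h, b_h, a_n, b_n = rates(v0)
    # Start each gate at its steady-state value for the resting voltage.
    m = a_m / (a_m + b_m)
    h = a_h / (a_h + b_h)
    n = a_n / (a_n + b_n)
    v = v0
    trace = []
    for _ in range(round(t_total_ms / dt_ms)):
        a_m, b_m, a_h, b_h, a_n, b_n = rates(v)
        i_na = G_NA * m ** 3 * h * (v - E_NA)   # sodium current
        i_k = G_K * n ** 4 * (v - E_K)          # potassium current
        i_l = G_L * (v - E_L)                   # leak current
        dv = (i_ext - i_na - i_k - i_l) / C_M
        m += dt_ms * (a_m * (1.0 - m) - b_m * m)
        h += dt_ms * (a_h * (1.0 - h) - b_h * h)
        n += dt_ms * (a_n * (1.0 - n) - b_n * n)
        v += dt_ms * dv
        trace.append(v)
    return trace


def count_spikes(trace, threshold=0.0):
    """Count upward crossings of the (assumed) spike threshold."""
    return sum(1 for a, b in zip(trace, trace[1:]) if a < threshold <= b)


trace = simulate_hh()
print("spikes in 50 ms:", count_spikes(trace))
```

With a constant input current the voltage should rise, spike, recover and spike again, which is the wave-like cycling the rest of this post is about. The potassium and sodium gates lag behind the voltage, and that lag is what stops the cell firing again straight away.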

I am using that as a base and have extended it to include a calcium ion, which should make it more like our human neurones, and I have been trying to apply it to various tasks to learn what I can. It is really different from the maths that underpins normal artificial intelligence, though. It has lots of waves and it's a lot more expressive.

A key difference is that the neurones in artificial neural networks are not waves. They take an input from the linear algebra and apply a function that ensures non-linearity, which makes the maths "squishy" (I have literally seen that term used in textbooks, and if you know the maths it is really apt).

In each case an ANN activation function takes an input, and the output is shown on graphs like the ones below.

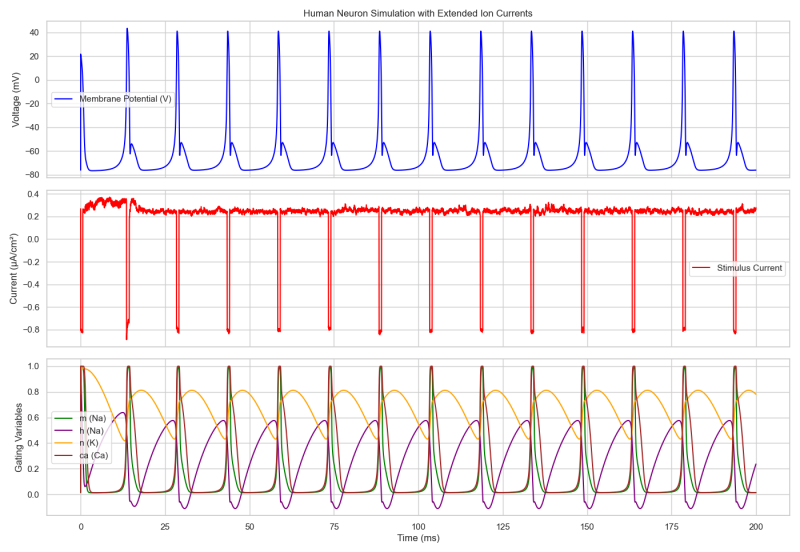
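The weighted sum followed by an activation function can be sketched in a few lines of Python. This is a generic illustration and is not taken from any particular framework. The names `artificial_neuron`, `relu` and `sigmoid` and the example weights are assumptions.

```python
import math


def relu(x):
    """Rectified linear unit: passes positive values, clamps negatives to 0."""
    return max(0.0, x)


def sigmoid(x):
    """Squashes any real input into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def artificial_neuron(inputs, weights, bias, activation):
    """Linear algebra first (weighted sum plus bias), then the non-linearity."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)


# Hypothetical inputs and weights, chosen only for illustration.
x = [1.0, 2.0]
w = [0.5, -0.25]
print(artificial_neuron(x, w, 0.0, relu))
print(artificial_neuron(x, w, 0.0, sigmoid))
print(artificial_neuron(x, w, 0.0, math.tanh))
```

Note there is no time or history anywhere in this function. The same input always gives the same output, which is exactly where it differs from a biological neurone.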

Unlike biological neural networks, though, Artificial Neural Networks (ANN) do not have any wave-like features, and they could not form something like the below, where the neurones have intrinsic wave-like properties. So I have been trying to get my head around what creates that in neural networks, and experimenting as a learning exercise. All graphs are taken from, and pardon the poor metaphor, "live" neurones mid training.

Biological neurones are like waves: they take an input, and whether they do anything depends on their whole recent history. The output is likely to be spikey and wave-y, though.

A Picture Is Worth a 1000 Words

I came up with about 4 different maths models that were stable, as a lot of the possible mathematical models do not cycle or do so poorly. The way the models work is that the 4 different ion channels affect how the cell depolarises and repolarises, so that after it fires it briefly cannot fire again.

Hopefully you can see why the, err, technical terms "spikey" and "wave-y" are applicable.

Some of the maths created different shapes.

This one does not work, but I liked the look of it and so threw it in here.

The one below ran the most "hot": it cycled fast and had aggressive calcium concentration spikes, so I did the rest of the testing with it.

Focusing On One Model

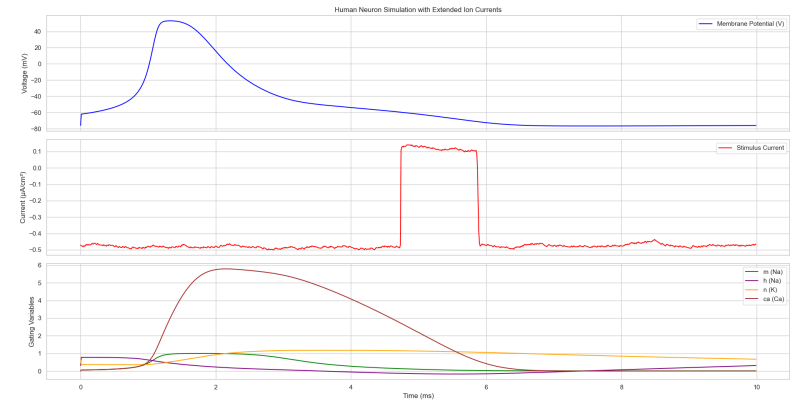

For my learning I then focused in on one of the models and a single neurone during a learning test. The way it is typically explained is that a neurone fires, and it seems to be assumed that the firing input lines up with the output firing point. A set of random checks I did seemed to show that the timing becomes decoupled, though. Now this could be down to the quality of the simulation, but I felt it was a real visual representation of the idea that if the input (red line) arrives misaligned with the firing, then the connection between them will weaken.

You can see the firing (the blue wave) comes like a rolling wave, and the ion channels (the 4 different colours on the bottom) cycle round and round each other.

Something I feel is not explained well is that, at high activity, a human neurone fires at a rate of 120 hertz, or 120 times a second for the layperson. If in that state two neurones fired at the same time and in the same way, then they would have to be almost identical, so what would be the benefit to the network of having multiple copies? It seems likely that the internal cycling of the neurone is in some sense a wave, or an oscillating spiking system, that is being sped up or slowed down by other neurones.

The traditional artificial neural network is therefore likely a very low-level model, and might be fundamentally different from biological neurones, which from this would seem to rely on the frequency and rhythm of spikes within the system. That is fairly decoupled from how our AI works. If you built AI like this, it is not a simple thing to envision how it would do classical computation with waves (or maybe that is just me).

In Data

So that is interesting. Collecting data on this neurone, it tends to fire rather than not fire in a 0.1 millisecond window. To put this in perspective, one cycle of the model simulates 0.01 milliseconds. It is worth reflecting on this: your brain cycles fast, and every neurone in our heads might as well be its own little CPU or GPU, it is that fast.

Its average voltage in a 1 millisecond window shows two voltage areas, which conform to the firing above.

The vast majority of them will have a negative voltage spike within that 1 millisecond window.

And the max voltage is a very short, sharp 60-70 volt firing.
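For anyone wanting to reproduce this kind of windowed summary, here is a rough sketch of how a voltage trace can be cut into fixed windows and summarised. It assumes the trace is a plain list with one sample per 0.01 millisecond step. The function name `window_stats`, the firing threshold of 0 and the toy trace are assumptions for illustration, not the code or data behind the plots.

```python
def window_stats(trace, dt_ms=0.01, window_ms=1.0, threshold=0.0):
    """Split a voltage trace into back-to-back windows and summarise each one.

    `threshold` is an assumed cut-off: a window counts as "fired" if the
    voltage goes above it at any point inside the window.
    """
    steps = round(window_ms / dt_ms)  # samples per window, e.g. 100 for 1 ms
    stats = []
    for start in range(0, len(trace) - steps + 1, steps):
        chunk = trace[start:start + steps]
        stats.append({
            "mean": sum(chunk) / len(chunk),
            "min": min(chunk),
            "max": max(chunk),
            "fired": max(chunk) > threshold,
        })
    return stats


# Toy trace (made up): 1.5 ms sitting at -65, then 0.5 ms sitting at +40.
toy_trace = [-65.0] * 150 + [40.0] * 50
for row in window_stats(toy_trace, window_ms=1.0):
    print(row)
```

Passing `window_ms=0.1` gives the finer fire or not-fire view, and a larger value gives the averaged view. Any leftover samples that do not fill a whole window are dropped.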

Increasing to 5 millisecond window

Changing the window of experimentation to around 0.5 milliseconds, you can see the network does slow down and speed up minutely, by 1 or so hertz. That would place it firing several thousand times a second, though, so I think that is on me, as it seems greater than the human maximum, which I found anecdotally to be around 350 or 500-600 hertz.
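The conversion between spikes per window and hertz is simple arithmetic, sketched below. The helper names are hypothetical and the example numbers are only illustrations of the arithmetic, not measurements from the simulation.

```python
def firing_rate_hz(spike_count, window_ms):
    """Spikes counted in a window of `window_ms` milliseconds, as spikes per second."""
    return spike_count * 1000.0 / window_ms


def interspike_interval_ms(rate_hz):
    """Average gap between spikes, in milliseconds, at a given firing rate."""
    return 1000.0 / rate_hz


def steps_per_spike(rate_hz, dt_ms=0.01):
    """How many simulation steps pass between spikes at a given firing rate."""
    return interspike_interval_ms(rate_hz) / dt_ms


# One spike in every 0.5 ms window works out at thousands of hertz.
print(firing_rate_hz(1, 0.5))
# The biological figures quoted in this post, as a gap between spikes.
for hz in (120, 350, 600):
    print(hz, "Hz:", round(interspike_interval_ms(hz), 2), "ms between spikes,",
          round(steps_per_spike(hz)), "steps at 0.01 ms per step")
```

Put this way, a neurone at 120 hertz should sit for several hundred 0.01 millisecond steps between spikes, which gives a rough target for how much the simulated neurone would need to slow down.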

So ideally, to get a better simulation, I should try and put some brakes on there. I am focused on computational use currently, though, and I really did choose the one out of the 4 that ran the most hot, and I am still waiting on some big testing to go further. It is likely that in our brains the hertz is limited by the availability of ion replacement into the cell after polarisation and depolarisation, which itself seems to be monitored and controlled by the brain to aid in learning and computation. This test does not simulate that aspect at all.

Conclusion

I really liked this test. There are models I have that do seem to get better with being bigger, and these tests were very small. I am hopeful that if I can get them big enough they should be able to slow themselves down a bit from the current excessive hertz. I think it was a really fascinating experiment, though, as it shows a marked difference between how biological neurones work compared to AI, and how current AI probably does not have anything particularly like our memories or brain waves.

The problem of too high a hertz might be good or bad. It is likely the biological brain is limited in how fast it can feed sodium, calcium, etc. into its neurones, and it takes energy to open the ion gates and replenish them. It might be that a neurone freed of this limitation in its simulation is just OK running hotter. It also might be that I need to change the time granularity, because these neurones do have a parameter that controls the granularity and scale of the simulation, and I could just turn it up a bit.

I think the great thing about learning this is that it really shows how weirdly different our brains are compared to these artificial neural networks, which we are increasingly told to think of as analogous to our brains. It raises interesting questions: if you were to assume we needed to develop AI that had similar features, it's not even clear how you would do maths with these types of waves and apply them to problems. It is likely that the brain deals with frequency, spiking and all sorts of things that ANNs just do not have to deal with.

We truly are a marvel when you think that the most complex piece of engineering in the universe is on top of your shoulders.
