"All the world's a differential equation and the men and women are just variables" - Ben Orlin

So I have been playing around with the Hodgkin and Huxley model and implementing it inside an AI, trying to predict letters with no inputs. It's a hobby, I swear.

I have an interest in knowing exactly how the brain works, and one way in is to look at the differences between ANNs like ChatGPT and the diffusion models we use to describe individual neurones.

Today we are looking at the code for human neurones. My previous post was "I simulated squid neurones and taught them English".

This is the starting point for my "I simulated human neurones and taught them English". I hope to be back in a couple of weeks.

The code below gives a quick way of showing how the activation function for a human neurone is proposed to work. It extends the Hodgkin and Huxley differential equations for a squid neurone by adding an additional Ca ion channel. You can run the code for the activation function in Python, but I'm keeping the training methods to myself.
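For anyone who prefers the maths to the code, this is the system as I read it straight off the Python in this post. Nothing here is new: the symbols are just the code's variable names, and the calcium gate `ca` enters to the first power because that is how the code uses it.

$$
C_m \frac{dV}{dt} = I_{\text{stim}} - \left[ g_{Na}\, m^3 h\, (V - E_{Na}) + g_K\, n^4 (V - E_K) + g_{Ca}\, \mathrm{ca}\, (V - E_{Ca}) + g_L (V - E_L) \right]
$$

$$
\frac{dx}{dt} = \alpha_x(V)\,(1 - x) - \beta_x(V)\, x, \qquad x \in \{m,\ h,\ n,\ \mathrm{ca}\}
$$

Dropping the $g_{Ca}$ term and the `ca` gate gives back the original squid Hodgkin and Huxley equations.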

Try it at home

```python
import numpy as np
import seaborn as sns
import matplotlib.pyplot as plt

# Define gating variables for a human neuron
def alpha_m(V): return 0.1 * (V + 40) / (1 - np.exp(-(V + 40) / 10))
def beta_m(V): return 4.0 * np.exp(-(V + 65) / 18)
def alpha_h(V): return 0.07 * np.exp(-(V + 65) / 20)
def beta_h(V): return 1 / (1 + np.exp(-(V + 35) / 10))
def alpha_n(V): return 0.01 * (V + 55) / (1 - np.exp(-(V + 55) / 10))
def beta_n(V): return 0.125 * np.exp(-(V + 65) / 80)
def alpha_ca(V): return 0.2 * (V + 30) / (1 - np.exp(-(V + 30) / 9))
def beta_ca(V): return 0.1 * np.exp(-(V + 40) / 10)

# Initialize neuron parameters
C_m = 1.5      # Membrane capacitance, uF/cm^2
g_Na = 120.0   # Sodium maximum conductance, mS/cm^2
g_K = 36.0     # Potassium maximum conductance, mS/cm^2
g_L = 0.3      # Leak maximum conductance, mS/cm^2
g_Ca = 2.0     # Calcium maximum conductance, mS/cm^2
E_Na = 50.0    # Sodium reversal potential, mV
E_K = -77.0    # Potassium reversal potential, mV
E_L = -54.387  # Leak reversal potential, mV
E_Ca = 140.0   # Calcium reversal potential, mV

# Time setup
dt = 0.01    # Time step, ms
t_max = 200  # Max time, ms
time = np.arange(0, t_max, dt)

# Stimulus current
I_stim = np.zeros(len(time))
I_stim[500:1500] = 1  # Stimulus applied from 5 ms to 15 ms

# Initialize variables
V = -70.0  # Resting membrane potential, mV
m = alpha_m(V) / (alpha_m(V) + beta_m(V))
h = alpha_h(V) / (alpha_h(V) + beta_h(V))
n = alpha_n(V) / (alpha_n(V) + beta_n(V))
ca = alpha_ca(V) / (alpha_ca(V) + beta_ca(V))

# Arrays to store results
V_trace = np.zeros(len(time))
m_trace = np.zeros(len(time))
h_trace = np.zeros(len(time))
n_trace = np.zeros(len(time))
ca_trace = np.zeros(len(time))

# Simulate dynamics
for i, t in enumerate(time):
    # Ionic currents
    I_Na = g_Na * m**3 * h * (V - E_Na)
    I_K = g_K * n**4 * (V - E_K)
    I_Ca = g_Ca * ca * (V - E_Ca)
    I_L = g_L * (V - E_L)
    I_ion = I_stim[i] - (I_Na + I_K + I_Ca + I_L)

    # Update membrane potential
    V += dt * I_ion / C_m

    # Update gating variables
    m += dt * (alpha_m(V) * (1 - m) - beta_m(V) * m)
    h += dt * (alpha_h(V) * (1 - h) - beta_h(V) * h)
    n += dt * (alpha_n(V) * (1 - n) - beta_n(V) * n)
    ca += dt * (alpha_ca(V) * (1 - ca) - beta_ca(V) * ca)

    # Store results
    V_trace[i] = V
    m_trace[i] = m
    h_trace[i] = h
    n_trace[i] = n
    ca_trace[i] = ca

# Plot results with Seaborn
sns.set(style="whitegrid")
fig, axes = plt.subplots(3, 1, figsize=(14, 10), sharex=True)

# Membrane potential
sns.lineplot(x=time, y=V_trace, ax=axes[0], color='blue', label='Membrane Potential (V)')
axes[0].set_title('Human Neuron Simulation with Extended Ion Currents')
axes[0].set_ylabel('Voltage (mV)')
axes[0].legend()

# Stimulus current
sns.lineplot(x=time, y=I_stim, ax=axes[1], color='red', label='Stimulus Current')
axes[1].set_ylabel('Current (µA/cm²)')
axes[1].legend()

# Gating variables
sns.lineplot(x=time, y=m_trace, ax=axes[2], label='m (Na)', color='green')
sns.lineplot(x=time, y=h_trace, ax=axes[2], label='h (Na)', color='purple')
sns.lineplot(x=time, y=n_trace, ax=axes[2], label='n (K)', color='orange')
sns.lineplot(x=time, y=ca_trace, ax=axes[2], label='ca (Ca)', color='brown')
axes[2].set_xlabel('Time (ms)')
axes[2].set_ylabel('Gating Variables')
axes[2].legend()

plt.tight_layout()
plt.show()
```
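One small thing to watch in the rate functions: `alpha_m`, `alpha_n` and `alpha_ca` all have the shape x / (1 - exp(-x / k)), which is 0/0 if V ever lands exactly on -40, -55 or -30 mV. It rarely happens with floating point voltages, but when it does you get a NaN that poisons the rest of the run. Below is a minimal sketch of one common workaround, filling in the limit near zero. The names `vtrap`, `alpha_m_safe` and friends are my own and not part of the code above, and the 1e-6 cutoff is an arbitrary choice.

```python
import numpy as np

def vtrap(x, k):
    """Evaluate x / (1 - exp(-x / k)) without hitting 0/0 at x = 0.

    Near x = 0 the expression tends to k + x / 2 (first-order Taylor
    expansion), so that value is used whenever |x / k| is tiny.
    """
    x = np.asarray(x, dtype=float)
    small = np.abs(x / k) < 1e-6
    # np.where evaluates both branches, so swap the near-zero entries for a
    # harmless value before dividing
    safe_x = np.where(small, 1.0, x)
    return np.where(small, k + x / 2.0, safe_x / (1.0 - np.exp(-safe_x / k)))

# Same constants as the rate functions in the post, routed through vtrap
def alpha_m_safe(V): return 0.1 * vtrap(V + 40, 10)
def alpha_n_safe(V): return 0.01 * vtrap(V + 55, 10)
def alpha_ca_safe(V): return 0.2 * vtrap(V + 30, 9)

if __name__ == "__main__":
    # The three voltages where the original formulas would give NaN
    print(alpha_m_safe(-40.0), alpha_n_safe(-55.0), alpha_ca_safe(-30.0))
```

Away from those three voltages the safe versions return the same numbers as the originals, so they should be drop-in replacements in the simulation loop.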

It's a cool spiking neurone

I really liked this neurone; it's a really cool spiking neurone model. Investigating it clued me into the Blue Brain Project, which is a project to simulate mouse brains.

If you run it in Python you can see it spikes, but there are residual ripples, and my initial testing shows it's really stubborn and won't spike again after it initially spikes. It also just runs a bit more safely than the Hodgkin and Huxley model, and it seems harder to get it to produce the type of error that cannot be handled.
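If you want to put a number on "it spikes once and then refuses to spike again" rather than eyeballing the plot, a rough way is to count upward threshold crossings in the voltage trace. This is only a sketch: `count_spikes` is a helper name I made up, and the 0 mV threshold is an assumption you may need to move so that the residual ripples are not counted as spikes.

```python
import numpy as np

def count_spikes(v_trace, threshold=0.0):
    """Count upward crossings of `threshold` (mV) in a voltage trace.

    Returns the number of crossings and the sample indices where they occur.
    """
    v = np.asarray(v_trace, dtype=float)
    above = v >= threshold
    # A spike onset is any sample that is at or above threshold while the
    # previous sample was below it
    onsets = np.flatnonzero(~above[:-1] & above[1:]) + 1
    return len(onsets), onsets

if __name__ == "__main__":
    # Synthetic trace: resting at -65 mV with two square "spikes" to +30 mV
    demo = np.full(100, -65.0)
    demo[10:15] = 30.0
    demo[50:55] = 30.0
    n_spikes, onsets = count_spikes(demo)
    print(n_spikes, onsets)
```

With the simulation from this post you would call `count_spikes(V_trace)` after the loop and multiply the returned indices by `dt` to get spike times in ms.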

Smooth like butter

The training has gone well. I have packaged it into a neural network and am applying the same rough rules from the previous post about squid neurones. Admittedly I'd love to tell you more, but I'm spending some more time on that as the simulations have been, err, interesting, and I will leave it at that.

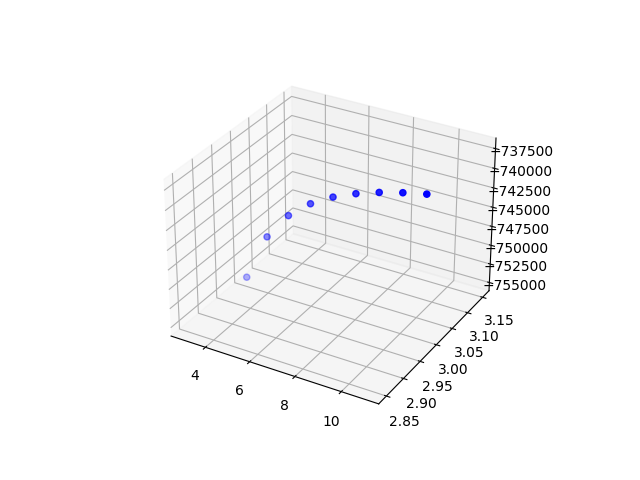

I have been trying different ways to train it, and I have noted that where the implementation works it gives a very smooth output when sizing up. This was the minimal training method I had for the squid Hodgkin and Huxley model. I have yet to get it to work much better than the past Hodgkin and Huxley model tests, but I can see that it seems "better" in that there is less spiking in performance.

I also figured out a better way of organising and stacking the neurones, and I'm currently looking at how easy it is to extend and how big I can get it.

I have a couple of tests running on it right now and have started transferring it and the training method into C++ to do larger-scale tests.

EDIT: a few more training sessions show it really does expand laterally more than the squid neurones. It's bigger, smoother, and its error is lower on the impossible tests I set it. It's worked really well.

I think at most I need to collect my thoughts on how different it is from the squid neurones.

I have a human brain simulation running on my PC; it felt worth a blog post... yay?
