"Everything has beauty, but not everything can see." - Confucius

So I am still trying to build a conscious machine as a side project. I know it's quite a common thing now, and it sits alongside trying to build a traditional roguelike, training to be a data scientist, building a pirate roguelike (don't ask) and learning C++. I came across my own blog recently and realised the numbers I had posted on the current state of the Hello world project were out of date.

Quick Update

I am at a value of 160k, a large increase on past estimates. I think I was previously saying, hey, I can get 900 entropy of intelligence.

Then ChatGPT happened and I was kind of confused about LLMs for a while.

I was trying to calculate a score for "machine conscious" using an entropy measure in which each unit means 10x more unlikely to happen (i.e. a log10 value of entropy). The whole point of this project is to build undeniable proof of AI intelligence for the world (a tall order; i.e. arrogant as all hell, who do you think you are). It is based on a machine trying to functionally follow the text in a book, separate from any inputs.
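The scoring idea above can be sketched in a few lines. This is a minimal sketch. It assumes each observed outcome comes with a probability under some baseline, for example random guessing. The post does not say which baseline it uses, and `improbability_log10` is a hypothetical name. A log10 unit of information like this is a standard unit, usually called a hartley (or ban).

```python
import math


def improbability_log10(probabilities):
    """Total improbability in log10 units (hartleys): 1 unit = 10x less likely.

    Hypothetical helper. `probabilities` are the chances of each observed
    outcome under an assumed baseline; multiplying independent chances is
    the same as adding their -log10 values.
    """
    return sum(-math.log10(p) for p in probabilities)


# Guessing 5 letters in a row from a 26-letter alphabet by pure chance:
print(round(improbability_log10([1 / 26] * 5), 3))  # 7.075
```

Working in logs keeps the bookkeeping additive. Each extra correct, unlikely-by-chance prediction adds to the score instead of multiplying an ever-smaller probability.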

Once you have stopped telling yourself this is impossible, an interesting realisation happens. A) This would be a great test of memory: absent any input from the book's current state, any evidence of learning must arise from an improvement in memory and in the flow of information through the neural network. B) Because no input was received, any quantum of information (in the entropy sense) that is received and embedded in the neural network gets there through the network having, in some sense, been "conscious" of all the previous quanta of information, and that therefore acts as a definition of "conscious".

This definition means that a machine which follows along with a given thing absent stimulus (inputs) is conscious by dint of knowing about a system B outside itself through knowing of changes in itself: from the error applied to itself, it is able to infer an external world or system. Consciousness might therefore mean the amount of information about the external system it is able to infer, divided by the amount of information in the system itself.
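Written as a formula, the proposed measure is a ratio. The symbols below are introduced here for illustration only. The post does not say how either quantity would be measured, but using the same units for both (for example the log10 units used for the score) would make C dimensionless.

```latex
C = \frac{I_{\text{ext}}}{I_{\text{self}}}
```

Here I_ext is the information the system can infer about the external system and I_self is the information held in the system itself. On this reading, C > 1 would mean the system infers more about the outside world than it stores.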

That sounds mad, but think about the state of your own brain: you know you have memory, so we might say that as long as you can update and change your own memories, you can smuggle information into the future. Therefore if I have memory, and that memory is of a continuous rather than binary nature, I might pass slight hints of an idea beyond the present. Think of a missile whose target coordinates are 10,50. If memory holds purely int values, then 10,50 is always 10,50. It does not matter how many times you calculate it, it's still 10,50.

But if I can store 10.5,50, and that .5 means I do not properly know whether the grid coordinate is exactly 10 or 11, then that matters for a missile. Not for a missile 10 ft from the ground, but maybe at 10,000 ft the missile starts to have cognitive time to explore that .5: is it 10 or 11? You see what I mean: 10,50 is a certainty, while 10.5,50 is incredibly freeing. In those 10,000 ft there is doubt, there is room to manoeuvre... quite literally, as that missile falls to earth it still has time to think and decide: is it really 10 or 11?
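The int-versus-continuous memory point can be shown with a toy. In this hypothetical sketch, `remember` re-stores a value after each small nudge, once with integer-only memory (`round`) and once with continuous memory (`float`). The nudges of 0.125 are chosen because they are exact in binary floating point.

```python
def remember(value, nudges, store):
    """Apply small nudges to a remembered value, re-storing it after each one.

    Hypothetical illustration: `store` decides how memory is kept.
    `round` models integer-only memory, `float` models continuous memory.
    """
    for nudge in nudges:
        value = store(value + nudge)
    return value


nudges = [0.125] * 4  # four small hints, each too small to reach the next integer

print(remember(10, nudges, round))  # 10: every hint is rounded away
print(remember(10, nudges, float))  # 10.5: the hints accumulate into the .5
```

With integer memory the coordinate is always 10, however many times you recompute it. With continuous memory the small hints survive and add up.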

It's a bit of a "Gnostic" concept, and I think these can often lead to error: many times when someone hears hoofbeats it is a horse, not a zebra, and the .5 is not helpful. But sometimes it is, and maybe when talking about AI it really is.

So 160k is good: it translates to odds of 1 divided by a 1 followed by 160k zeroes. No one will ask me, but that's the number it got; hey, if you do not believe me, maybe I can run you through the data over Skype or something.
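A probability of 1 in 10^160000 cannot be written out as an ordinary double, so a score like this has to stay in log space. This sketch reads the 160k as log10 units, as the post describes. It shows the underflow and converts the same quantity into bits and nats, using 1 hartley = log2(10) bits = ln(10) nats.

```python
import math

SCORE_LOG10 = 160_000  # the post's score: odds of 1 in 10^160000

# Written out as an ordinary double, the probability underflows to zero...
print(10.0 ** -SCORE_LOG10)  # 0.0

# ...so keep the exponent instead. The same quantity in other units:
bits = SCORE_LOG10 * math.log2(10)  # 1 hartley is about 3.32 bits
nats = SCORE_LOG10 * math.log(10)   # 1 hartley is about 2.30 nats
print(round(bits), round(nats))  # 531508 368414
```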

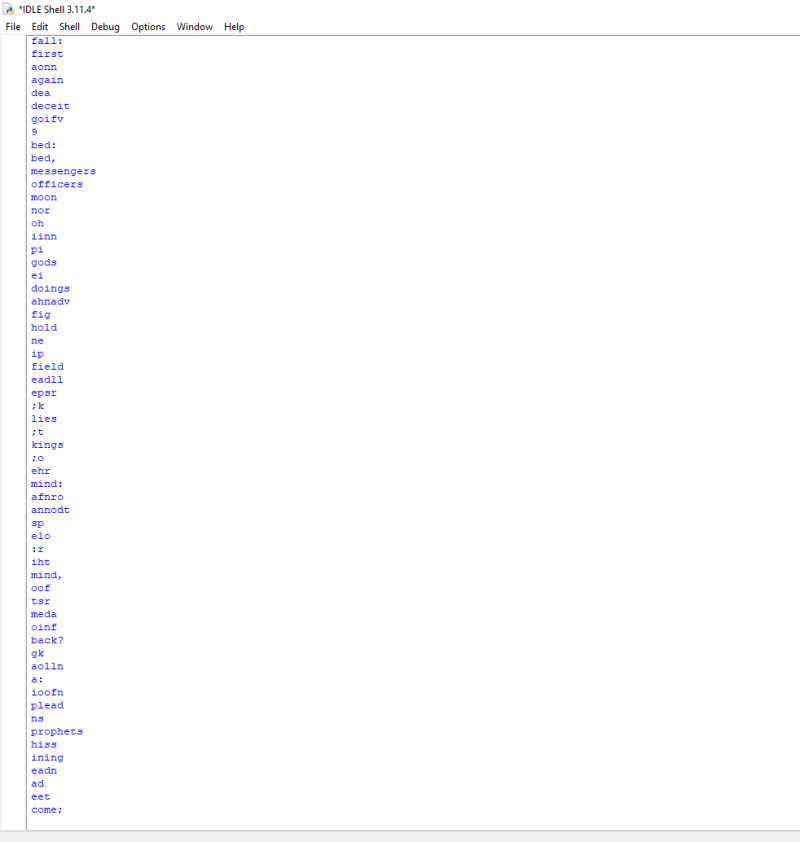

This is what my computer screen looks like

For some time my screen has looked like this: 850-ish tests on machine conscious, and I can get it to do what I describe below.

I have tried

- Differential equations

- Fractal architecture of neural networks

- Non-linear warped space within the network

The below includes English words: yes, the AI, under the constraints stated above, is able to discern words. But if you stop it, and I mean literally stop it, it does not know the next word that will happen. If you leave it to run, it knows the next letter, and this is enough for it to mathematically know the exact next letter. That is, this machine does not know the next part of the story, but it knows the next letter, and with help from a higher guidance it is insanely accurate; without it, it is nothing. It is, well, literally lost. As long as it receives an error signal from its "higher self" or "guardian angel" or whatever metaphor you choose, it will correct itself, and it will bizarrely remain insanely accurate despite being very small; it ought not to have this information-processing capability by itself.

Having received a correct answer, the neural network does correct itself... I still do not know how it does this...
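This toy makes the "corrected while running" versus "left alone" distinction concrete. It is a deliberately tiny stand-in, not the post's network. The post's model receives no text input, whereas this one does see the text: the true previous character is both its input and its correction. `OnlineCharModel`, `follow_along` and `free_run` are hypothetical names.

The model is a single softmax layer from the previous character to the next. It is nudged after every step by the cross-entropy error.

```python
import math


class OnlineCharModel:
    """Hypothetical toy: predicts the next character from the previous one.

    One softmax layer (a table of logits). After every prediction it is
    told the true character and nudges its logits by the cross-entropy
    error, a stand-in for the "higher self" correction.
    """

    def __init__(self, alphabet, lr=0.5):
        self.alphabet = sorted(set(alphabet))
        self.index = {c: i for i, c in enumerate(self.alphabet)}
        n = len(self.alphabet)
        self.logits = [[0.0] * n for _ in range(n)]  # logits[prev][next]
        self.lr = lr

    def probs(self, prev):
        row = self.logits[self.index[prev]]
        top = max(row)
        exps = [math.exp(v - top) for v in row]  # numerically stable softmax
        total = sum(exps)
        return [e / total for e in exps]

    def predict(self, prev):
        p = self.probs(prev)
        # Greedy choice; ties go to the earliest character in the alphabet.
        return self.alphabet[max(range(len(p)), key=p.__getitem__)]

    def correct(self, prev, actual):
        # Gradient of cross-entropy w.r.t. the logits is p - onehot(actual).
        p = self.probs(prev)
        row = self.logits[self.index[prev]]
        target = self.index[actual]
        for j, pj in enumerate(p):
            row[j] -= self.lr * (pj - (1.0 if j == target else 0.0))


def follow_along(model, text):
    """Music playing: predict each next character, then get corrected."""
    hits = []
    for prev, actual in zip(text, text[1:]):
        hits.append(model.predict(prev) == actual)
        model.correct(prev, actual)
    return hits


def free_run(model, start, steps):
    """Music stopped: no corrections, the model feeds on its own guesses."""
    out, prev = [], start
    for _ in range(steps):
        prev = model.predict(prev)
        out.append(prev)
    return "".join(out)


model = OnlineCharModel("abc")
hits = follow_along(model, "abc" * 50)
print(sum(hits[-30:]), "of the last 30 predictions correct")  # 30 of the last 30 predictions correct
print(free_run(model, "a", 5))  # bcabc
```

The free run only works here because the text is trivially periodic. Greedy generation from this model depends only on the previous character, so any free run must fall into a repeating cycle no longer than the alphabet. On real text it therefore soon stops matching the story once the corrections stop.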

List Of Theories Gone Past

I have kind of blown past a couple of theories without real results.

- Quantum objects can, at a minimum, be represented as a 3-number value: maths would tell you that you can simplify a quantum object to three values held at double precision. At one point I was really into trying to evaluate the entire external system of the AI as 3-number points on a rigid quantum scale. I thought maybe the language of god was quantum, but the thinking I have come to is that a single double in memory covers about 308 orders of magnitude in each direction: it can hold values up to roughly a 1 followed by 308 zeroes, or as small as roughly 10^-308, though only to about 15-17 significant digits. I.e. within a computer's memory there is enough range to treat each value as effectively infinitely large or small, and a neural network, within its space of possible responses, might define a given response with many numbers as a subset of the previous layers' responses. I.e. even a small neural network has a range of possible output values much greater than you can imagine. That is really confusing for an AI to map to, and I think what a lot of solutions like attention really do is simplify the number of "states" a neural network can map to, in order to make computation simpler.

- Fractals: goddam fractals. I have realised it's not useful to keep the density of different layers the same. I used to believe that the optimum was some approximate value within a narrow range of connectivity, i.e. that a given layer ought to have a certain level of connectivity to the preceding and following layers of neurons.
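The "308" in the quantum bullet is the exponent range of an IEEE 754 double, not its number of significant digits. Python's `sys.float_info` shows both sides of that trade-off: an enormous range, but only about 15-17 significant decimal digits of resolution.

```python
import sys

info = sys.float_info
print(info.max)      # 1.7976931348623157e+308  largest finite double
print(info.min)      # 2.2250738585072014e-308  smallest normal positive double
print(info.dig)      # 15  decimal digits guaranteed to survive a round trip
print(info.epsilon)  # 2.220446049250313e-16  gap between 1.0 and the next double

# Huge range, limited resolution: adding 1 to 1e16 is lost entirely.
print(1e16 + 1 == 1e16)  # True
```

So a network's outputs can span a vast range of magnitudes, but nearby values collapse onto the same double once they differ by less than the available precision.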

My Big Problem

The current work seems to work but has no predictive value for the future, i.e. the AI's efficiency drops off a cliff when the music stops. That is to say, the error correction in this AI is its input, and as long as that keeps coming it "dreams" an external world. This sounds really Gnostic and hippy, except... I have a scary idea that kind of wrecks simulation theory and Gnosticism, because my AIs are really smart and very efficient while the music plays, but when the music stops they, err, also stop.

I.e. they do not know what happens next, but having been corrected by the "real" they are very capable of being something of a facsimile of the "real". Which I think is a beautiful idea: you're not god. You might have an idea of what is happening outside yourself if you shut up and listen.

And I'd love to report the opposite, that they carried on the story, but painfully they do not yet; they just stop and wait for the external stimulus. So I can stop them, and despite being highly developed they do not know what happens next; they can only recognise the external change, internalise it and match it to their existing patterns. Which I think is beautiful in its own way: wake up sunshine, the world outside you, it's, err, real. I am as surprised as you. Who knew... stop dreaming... pain is just a lesson that you are really alive... Bizarrely, I can prove it with an AI on my laptop.

But yeah, I am trying to use it to predict crypto, because prices give a continuous external signal to match to...

Because in the decadent age we live in, of course I am... I also think they will do well, and I did the API work, because if one cannot be enlightened, one might at least be rich. Though probably not, but you've got to try...

But that is the quote: everything has beauty, except it does not see; you have to learn from it. You have to respect it. Like my small AI model: it has its beauty, it knows the whole world, and if given just a bit more information it will predict the next word, but not the word after that, and definitely not the word after that, because it does not see. And that is the problem of the age: we get a whole lot of information, we are very intelligent, but we do not see. And I am saddened, because if this machine of mine had predicted just one more step beyond the immediate future, from a missile at 80,000 ft to 60,000 ft, it would have been so cool.

Instead I say it did not see... To take our idea of a single conscious missile: as long as it has 80,000 ft, it might understand the whole way down through 60,000 ft, it might actually understand 59,000 ft, but not 58,000 ft. It does not, and so, absent a single small step, it loses all thought, and that is enough for me to grasp the nature of external reality. For me that is beautiful and sad. We only see a short while into our future; a lot of things are beautiful but do not see the consequences of their own outcomes.

Maybe we are alone in that. AI will not properly be AI while we are alone in that.
